IceKube: Finding complex attack paths in Kubernetes clusters

by Mohit Gupta

Kubernetes deployments can become quite complicated, in terms of scale, the number of resources and understanding how different components interact. This poses a considerable challenge when attempting to find privilege escalation paths an attacker could take to compromise the cluster and associated workloads.

This blog post introduces IceKube (https://github.com/WithSecureLabs/IceKube), an open-source tool that enumerates a cluster and generates a graph database of resource configurations and the relationships between different resources. IceKube then analyses these relationships to identify potential attacks. Because the attacks are stored in the graph database, they can be chained together: we can query for routes from inconspicuous low-privileged resources to a target using one or more of the 25 attack techniques currently implemented within IceKube. This approach was inspired by BloodHound, which maps attack paths in Active Directory environments.

The Problem

In our engagements, we regularly review clusters to find security misconfigurations that could be used by an attacker. This involves inspecting the configuration of all components to identify potential issues. Once found, we assess the impact and likelihood of exploitation to gauge the associated risks. This process necessitates keeping track of numerous resources to ascertain which ones might be impacted by a discovered vulnerability.

As clusters grow in size and complexity, the effort needed to identify potential attack paths, which often involve chaining multiple routes, grows exponentially. Since large clusters may include many Role-Based Access Control (RBAC) resources spanning many namespaces, manual testing may miss long and complex attack paths due to the sheer volume of interconnected issues.

Enter IceKube

IceKube was our solution to the problem. It uses the power of graph databases, which allows us to define individual attacks as relationships between nodes (Neo4j nodes, not Kubernetes nodes). This enables us to interrogate the database for practical attack paths irrespective of the number of hops needed.

IceKube enumerates and retrieves all resources for all API groups within a cluster and stores all this information in a Neo4j graph database (except for secret data from Secret resources, of course). Definitions of how different resources interact supply a basis for relationships within the database. For instance, it identifies connections such as the link between a Pod and its corresponding Service Account, or the association between a RoleBinding and the specified ClusterRole within it. This includes expanding wildcards and parsing RBAC definitions to add relevant relationships to each affected resource.
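
The wildcard expansion described above can be pictured with a small Python sketch. This is an illustrative model rather than IceKube's actual code: the `expand_rule` helper, the data shapes, and the shortened verb list are assumptions made for the example, and the `GRANTS_<VERB>` naming simply follows the pattern of the relationship names that appear in this post.

```python
# Illustrative model only: the verb list is a subset of RBAC verbs and the
# data shapes are assumptions, not IceKube's internal representation.
ALL_VERBS = ["get", "list", "watch", "create", "update", "patch", "delete"]


def expand_rule(rule, known_resources):
    """Expand one RBAC rule into (relationship, resource) pairs.

    A "*" in verbs or resources is expanded to every known value, so each
    affected resource ends up with its own explicit relationship.
    """
    verbs = ALL_VERBS if "*" in rule["verbs"] else rule["verbs"]
    if "*" in rule["resources"]:
        targets = list(known_resources)
    else:
        targets = [r for r in known_resources if r in rule["resources"]]
    return [(f"GRANTS_{verb.upper()}", target) for verb in verbs for target in targets]


# A rule granting every verb on service accounts.
rule = {"verbs": ["*"], "resources": ["serviceaccounts"]}
edges = expand_rule(rule, ["pods", "serviceaccounts", "secrets"])
for relationship, resource in edges:
    print(relationship, "->", resource)
```

Expanding wildcards at ingestion time means later queries can match on a concrete relationship such as GRANTS_UPDATE without having to reason about wildcards themselves.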

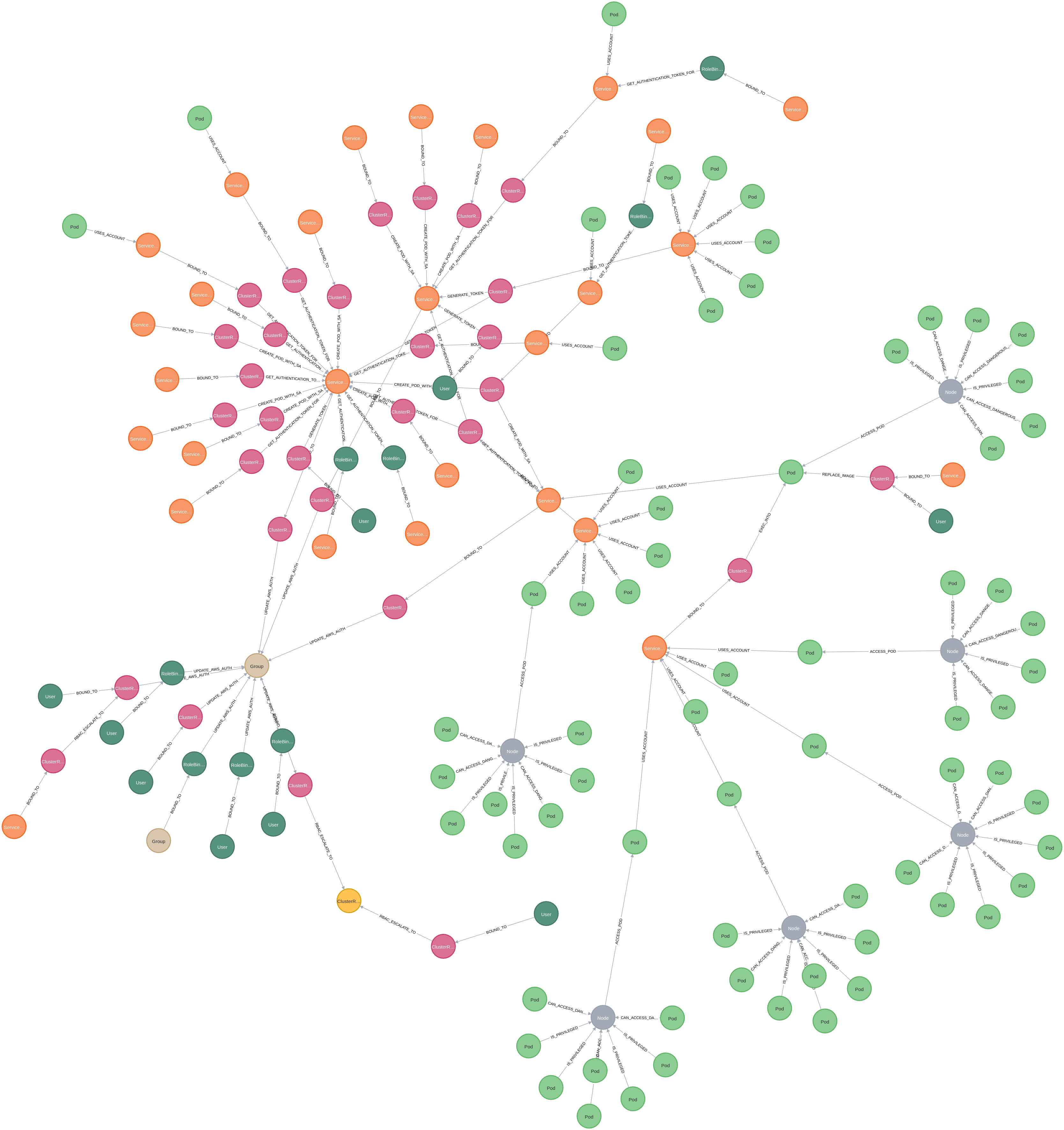

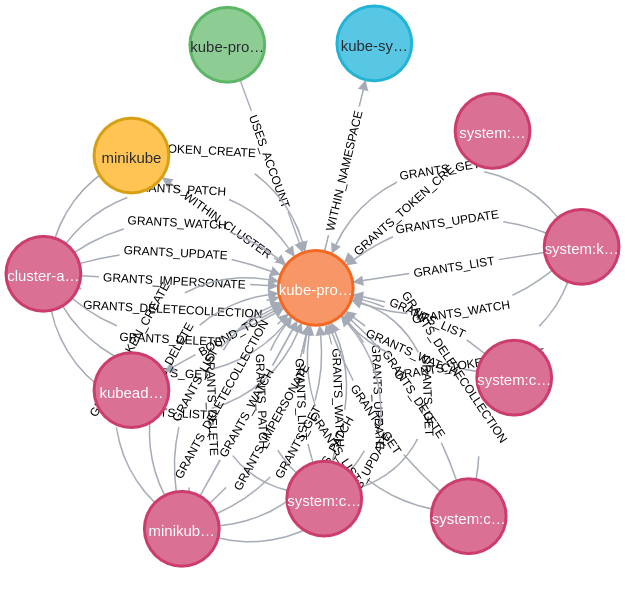

As an example, the above shows a Service Account in orange. The pink nodes around it are the various role bindings that have permissions to it within RBAC, with the lines connecting them showing what permissions they have. For example, GRANTS_UPDATE states it has the update verb on the service account. The green node is a Pod that uses the service account. The yellow node is the cluster the resource belongs to. Finally, the grey node is the namespace it belongs to.

With Neo4j now containing the resources and the relationships that define how they interact, IceKube uses a list of attack path definitions and associated queries to insert attack path relationships between nodes. These attack path relationships vary in complexity: some are straightforward duplications of existing relationships reinterpreted as possible attack routes, while others involve analysing multiple relationships to identify patterns indicative of potential attacks. Attack path relationships are marked with the attack_path property so they can be easily selected in further queries.

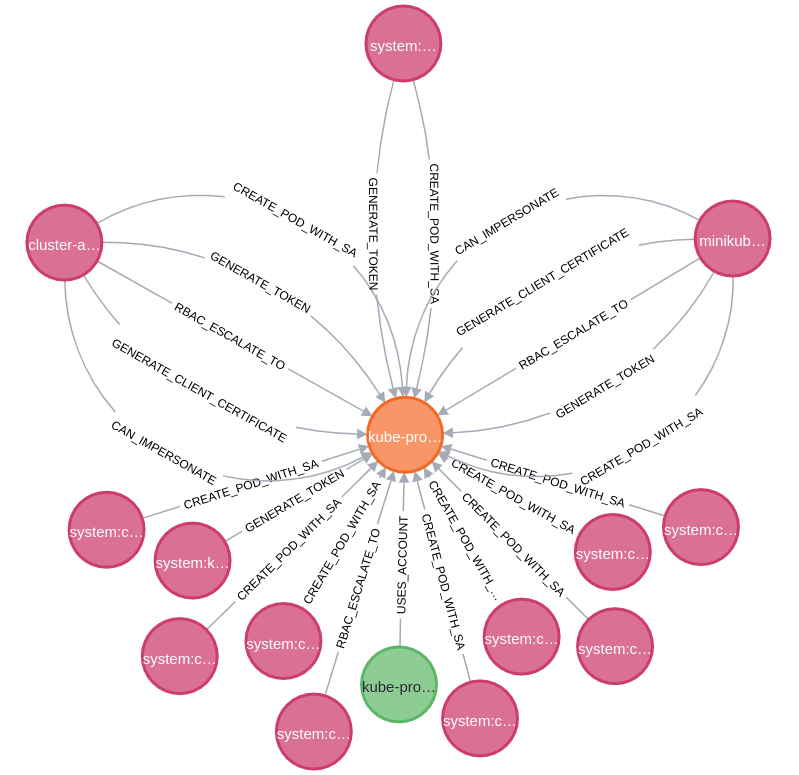

The above shows the same Service Account as earlier, this time with just the attack path relationships. The labels on the relationships give an idea of what type of attack a node can perform. For instance, the minikub... node to the left has four paths to the service account. One of these is GENERATE_TOKEN, meaning it can request a new service account token from the API server for the targeted account.

IceKube is designed to keep a distinction between regular relationships and attack path relationships, so we can tweak attack paths throughout the engagement. This may be to remove false positives, or to add new relationships. Keeping the distinction preserves the original set of relationships as a constant definition of the cluster as IceKube observed it, which we can revert to when needed.
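
To make the two layers concrete, here is a minimal Python sketch of the idea. It is a simplified in-memory model, not IceKube's implementation: IceKube stores these as Neo4j relationships, and the relationship names `USES_ACCOUNT` and `CAN_ACT_AS`, the node names, and both helper functions are hypothetical, chosen only for illustration.

```python
# Hypothetical mapping from a regular relationship type to the attack path
# it implies (the "straightforward duplication" case).
REGULAR_TO_ATTACK = {"USES_ACCOUNT": "CAN_ACT_AS"}


def derive_attack_paths(edges, rules=REGULAR_TO_ATTACK):
    """Return attack-path edges derived from the regular (observed) edges."""
    derived = []
    for edge in edges:
        if edge.get("attack_path"):
            continue  # never derive from the attack-path layer itself
        attack_type = rules.get(edge["type"])
        if attack_type is not None:
            derived.append({
                "src": edge["src"],
                "type": attack_type,
                "dst": edge["dst"],
                "attack_path": 1,  # marker that separates the two layers
            })
    return derived


def reset_attack_paths(edges):
    """Drop the attack-path layer, leaving the cluster as originally observed."""
    return [edge for edge in edges if not edge.get("attack_path")]


observed = [
    {"src": "rolebinding/edit-sa", "type": "GRANTS_UPDATE", "dst": "serviceaccount/app"},
    {"src": "pod/web", "type": "USES_ACCOUNT", "dst": "serviceaccount/app"},
]
graph = observed + derive_attack_paths(observed)
print(len(graph), "edges, of which", len(graph) - len(observed), "are attack paths")
```

Because every derived edge carries the marker, the attack-path layer can be discarded and regenerated with different rules without touching the observed relationships.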

Real Life Examples

Below we have three examples of how IceKube has helped us in engagements. In each of these examples, consultants needed to query for bespoke relationships that may not have previously been considered and so would customise their queries to their requirements. This has led to new attack paths being added to simplify future work. Note, while the stories are from client engagements, the queries are from a test cluster.

Sensitive Pods

On client engagements we sometimes find highly sensitive information in various places, for example credentials to a sensitive service mounted in a pod. While obtaining this information does not necessarily guarantee a subject the ability to escalate their privileges to cluster administrator, its disclosure could cause critical damage to the client. In such cases, using IceKube allows us to map out every subject which can obtain the information either directly through the RBAC permissions granted to them, or through various escalation paths.

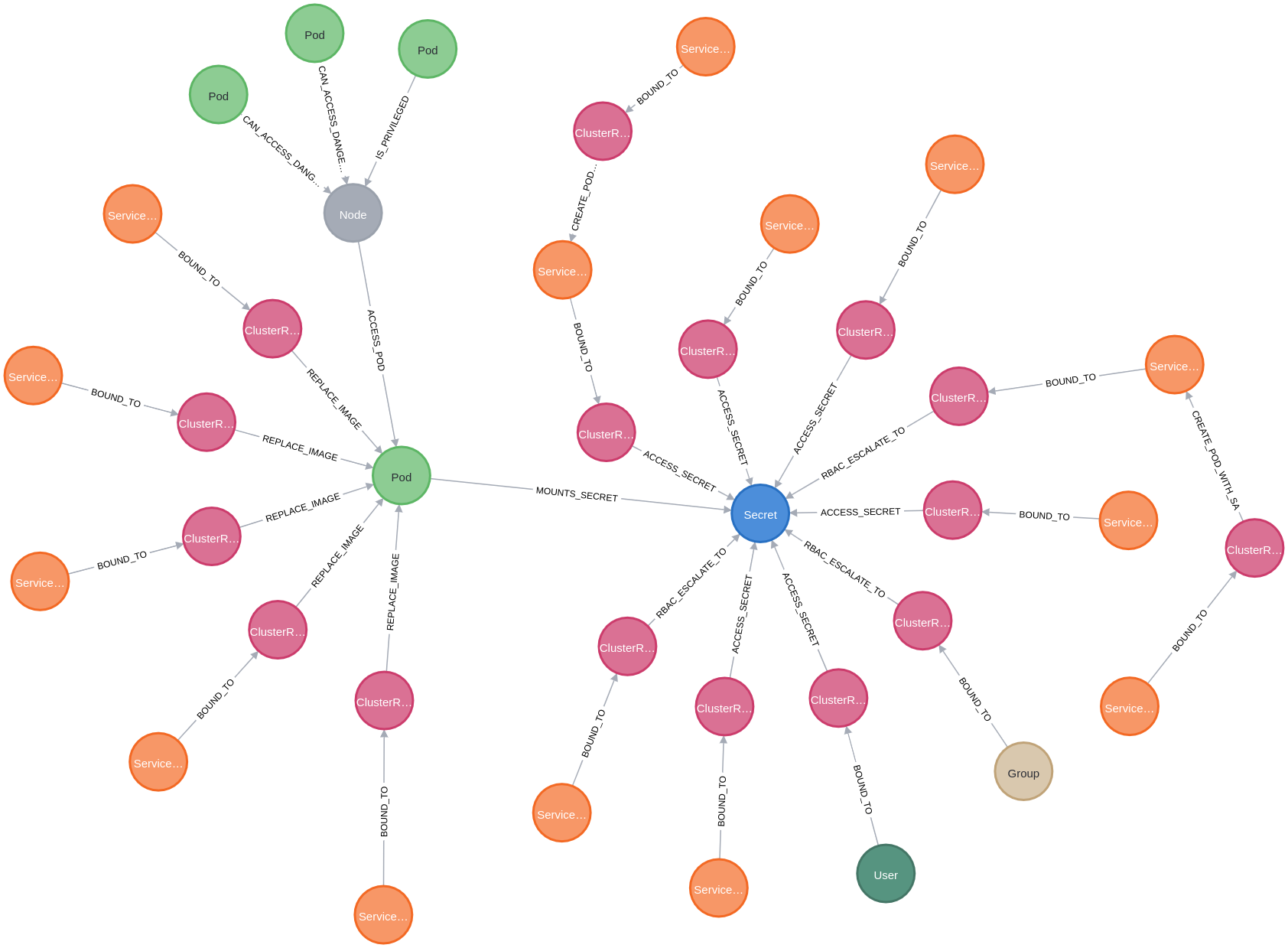

In the following example, credentials to a sensitive service are mounted within a pod, and our goal is to identify all subjects which can reach the secret either through RBAC or the pod.

    MATCH p = shortestPath((src)-[*]->(secret:Secret {
      name: 'test', namespace: 'default'
    }))
    WHERE ALL (r IN relationships(p) WHERE EXISTS (r.attack_path))
      AND (src:ServiceAccount OR src:Pod OR src:User OR src:Group)
    RETURN p

This query searches for service accounts, pods, users or groups that through various escalation paths can reach the secret named test. To focus the search, the query exclusively examines the attack path layer in the database, reducing the noise from all the relationships which represent everything in the cluster. The result of this query is a graph that distinctly illustrates the resources with direct permission to access the secret through RBAC, the nodes on the right, and the ones that can pivot through a pod, the nodes on the left.
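
For readers less familiar with Cypher, the effect of restricting the search to the attack path layer can be approximated with a breadth-first search in plain Python. This is only a sketch of the idea under simplified assumptions: the edge tuples, node names, and the `shortest_attack_path` helper are invented for the example and say nothing about how Neo4j evaluates the query internally.

```python
from collections import deque


def shortest_attack_path(edges, start, target):
    """Breadth-first search that only follows edges flagged as attack paths."""
    adjacency = {}
    for src, dst, is_attack_path in edges:
        if is_attack_path:  # ignore the regular relationship layer
            adjacency.setdefault(src, []).append(dst)

    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for neighbour in adjacency.get(path[-1], []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(path + [neighbour])
    return None  # no route exists within the attack-path layer


# (source, destination, is_attack_path) tuples for a made-up cluster.
edges = [
    ("user/dev", "pod/web", True),
    ("pod/web", "secret/test", True),
    ("user/dev", "namespace/default", False),
    ("namespace/default", "secret/test", False),
    ("user/ops", "secret/test", True),
]
for subject in ("user/dev", "user/ops", "user/guest"):
    print(subject, "->", shortest_attack_path(edges, subject, "secret/test"))
```

In this toy data, user/dev is connected to the secret through the namespace by regular relationships, but the only route returned for it is the one through the pod, because regular relationships are filtered out before the search starts.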

EKS - Modify aws-auth ConfigMap

In AWS EKS, the aws-auth ConfigMap is used to map IAM roles to Kubernetes RBAC users and groups. As such, it allows the API server to enforce authorisation on AWS entities when accessing the cluster. It is automatically created during the EKS cluster creation, is initially configured to allow nodes to join the cluster and can be manually edited to add additional entities. Once an IAM identity is added to the ConfigMap, it will be able to access the cluster using the Kubernetes API with its permissions depending on the mapping created.

Permissions relating to ConfigMaps are usually viewed as less privileged than those relating to Secrets, as ConfigMaps typically don’t contain sensitive information or offer an attacker much beyond some information disclosure. This occasionally leads administrators to be overly permissive, granting a subject access to ConfigMaps at a cluster-wide level on the assumption that the impact would be low. In EKS clusters, the impact is higher. An attacker with edit privileges could modify the aws-auth ConfigMap and add malicious roles to this configuration, mapping them to groups within the cluster such as system:masters, resulting in full privileges in the cluster.

In IceKube, attack paths are defined with a source and a destination. The source is something an attacker might have, for example access to a pod, and the destination is what they would gain from performing the attack; for example, a breakout technique would result in access to the underlying Kubernetes node. Typically, the query directly involves both the source and destination, using existing relationships between the two as a basis. The aws-auth ConfigMap attack path was slightly different, as the destination wasn’t the permission to edit the ConfigMap or the ConfigMap itself, but the system:masters group, which is completely unrelated to the relationships being queried. The query defined in the source code is:

    MATCH (src)-[:GRANTS_PATCH|GRANTS_UPDATE]->(:ConfigMap {
      name: 'aws-auth', namespace: 'kube-system'
    }), (dest:Group {
      name: 'system:masters'
    })

This query finds all subjects that can patch or update the aws-auth ConfigMap, and informs IceKube to create a relationship between that subject and the system:masters group. As this was added into IceKube after the first few instances of finding this issue, this path would be automatically considered in future queries when identifying what may be able to reach cluster administrator in EKS clusters.
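
The unusual part of this definition, a destination that is not connected to the matched relationship, can be sketched in Python as follows. This is a simplified model, not IceKube's code: the tuple-based edge format, the node names, and the `UPDATE_AWS_AUTH` relationship name are assumptions made for the example.

```python
# Node identifiers are simplified for illustration.
AWS_AUTH = ("ConfigMap", "kube-system", "aws-auth")
SYSTEM_MASTERS = "group/system:masters"


def aws_auth_attack_paths(edges):
    """Link every subject that can patch or update aws-auth to system:masters.

    The destination is fixed: it does not appear in the matched relationship,
    mirroring the two independent patterns in the MATCH clause above.
    """
    sources = {
        src
        for src, relationship, dst in edges
        if relationship in {"GRANTS_PATCH", "GRANTS_UPDATE"} and dst == AWS_AUTH
    }
    # "UPDATE_AWS_AUTH" is a hypothetical name for the new attack path.
    return sorted((src, "UPDATE_AWS_AUTH", SYSTEM_MASTERS) for src in sources)


edges = [
    ("user/dev", "GRANTS_PATCH", AWS_AUTH),
    ("user/dev", "GRANTS_UPDATE", AWS_AUTH),
    ("serviceaccount/ci", "GRANTS_UPDATE", AWS_AUTH),
    ("user/viewer", "GRANTS_GET", AWS_AUTH),
    ("user/other", "GRANTS_UPDATE", ("ConfigMap", "default", "aws-auth")),
]
attack_paths = aws_auth_attack_paths(edges)
for path in attack_paths:
    print(path)
```

Subjects with only read access, or with write access to a ConfigMap of the same name in another namespace, are not linked to the group.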


AKS - Create AzurePodIdentityException

In Azure AKS, managed identities are used to allow the provisioning of the Azure resources the cluster requires. Two default managed identities are provisioned for the Control Plane and the Kubelet. The Control Plane managed identity is assigned contributor privileges on the nodes’ resource group by default. As such, the Control Plane managed identity can request cluster administrator credentials using the az command-line tool.

To prevent pods from being able to abuse this, Azure implemented the NMI (Node Managed Identity) component, which uses iptables rules to intercept communications between pods and the IMDS service and deny non-permitted communications. However, pods which share the underlying node’s network configuration, such as privileged pods or those configured with host network, can still communicate with IMDS and abuse the managed identity’s credentials to obtain cluster administrator privileges. Azure also implemented the AzurePodIdentityException resource, which allows specific pods to be exempted from the NMI process using labels. As such, subjects which are allowed to create AzurePodIdentityException resources may be able to bypass the restrictions, authenticate to Azure using the Control Plane managed identity, and request cluster administrator credentials.

Demonstration

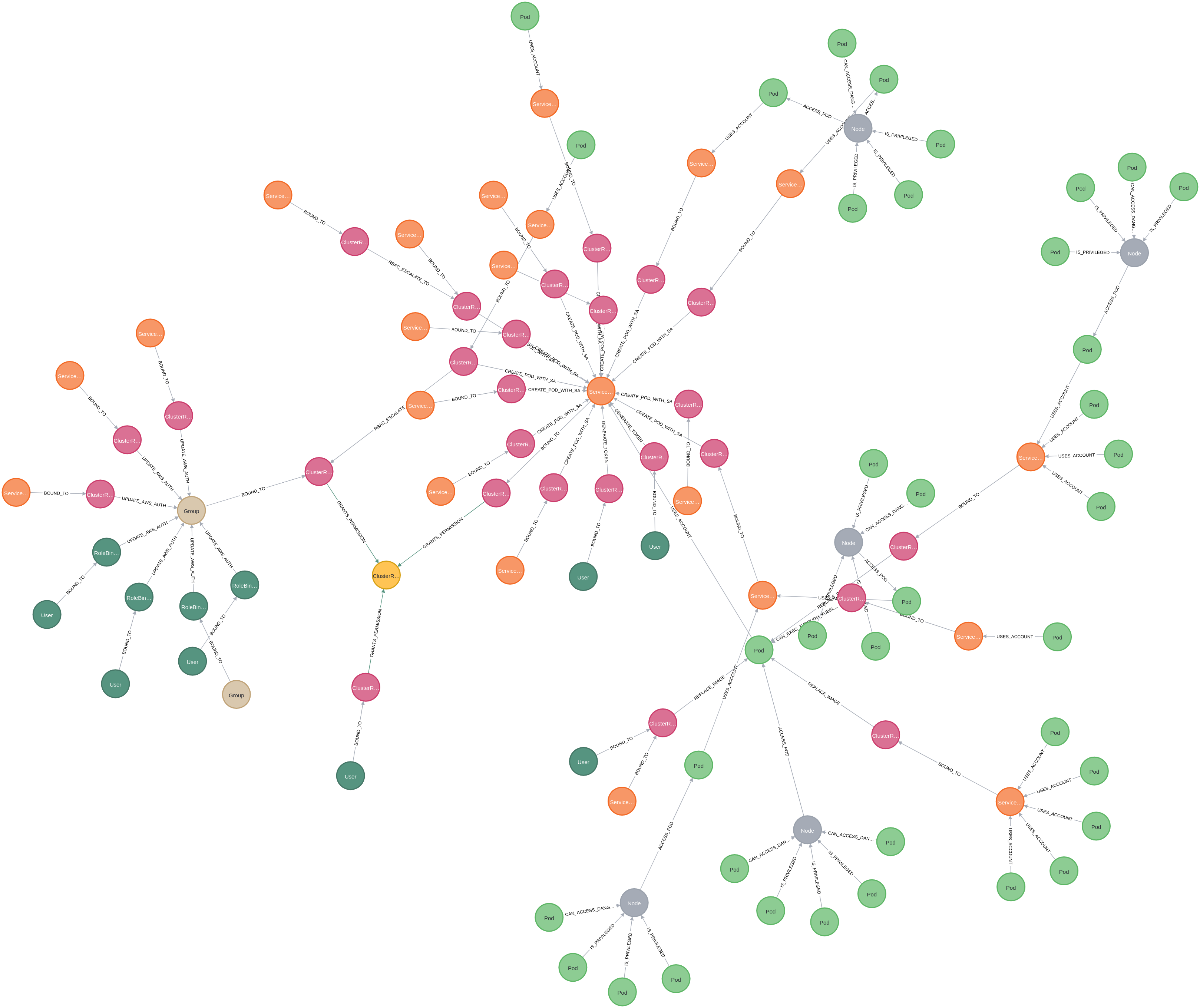

The following video shows how Neo4j can be queried for a route to cluster administrator. This is within a test cluster where a series of RBAC misconfigurations have been introduced that allow a pod in the alpha namespace to pivot through bravo and charlie, before being able to grant itself cluster administrator permissions. Finally, a query is made to see occurrences of a single attack path, namely AZURE_POD_IDENTITY_EXCEPTION, discussed above. This shows five different cluster role bindings that grant the required permissions to bound subjects for this attack. Whilst some of these are cluster administrator, a couple are not, including aks-test-crb.

Conclusion

We hope this post provides a clear understanding of IceKube, our motivation for creating it, and how we use it internally for projects. IceKube is under constant development. This includes enhancing our Neo4j query capabilities, expanding the potential of what can be achieved in a query, and extending the core relationships between nodes to support new attack paths.

We’re always interested in hearing new ideas on how to make this concept better. The best way to start a conversation would likely be through GitHub issues for the repository. The project can be found at https://github.com/WithSecureLabs/IceKube/.

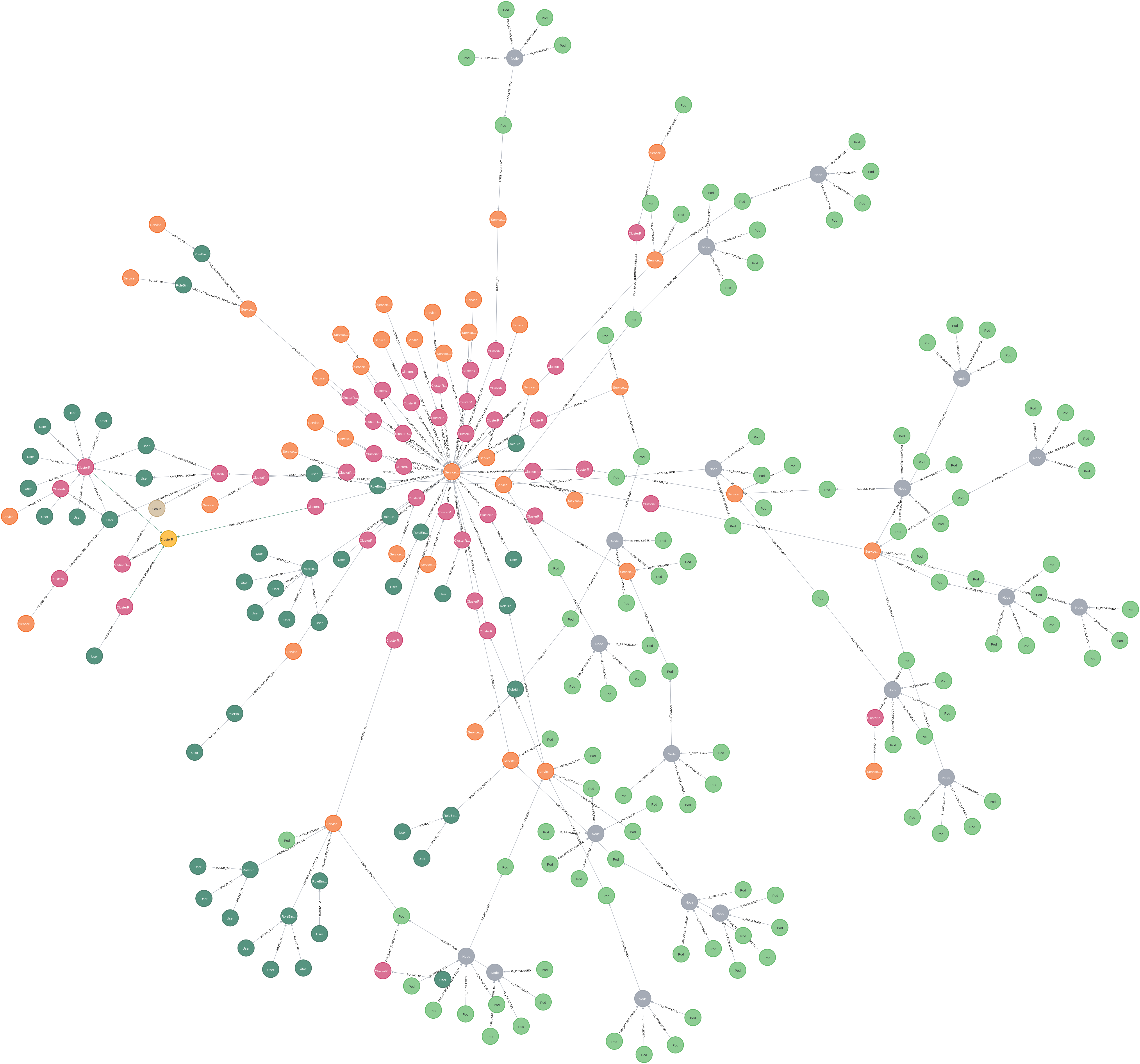

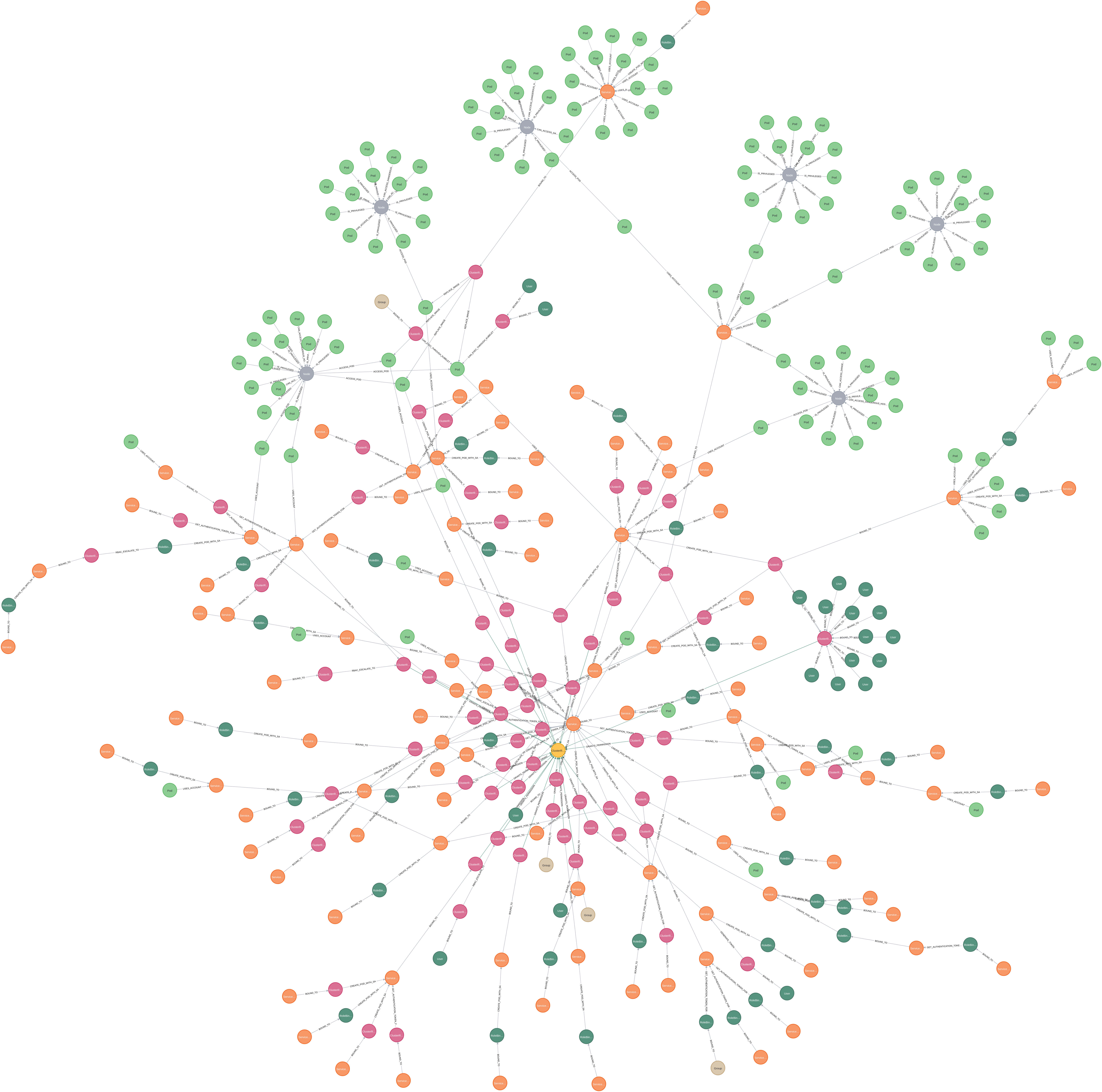

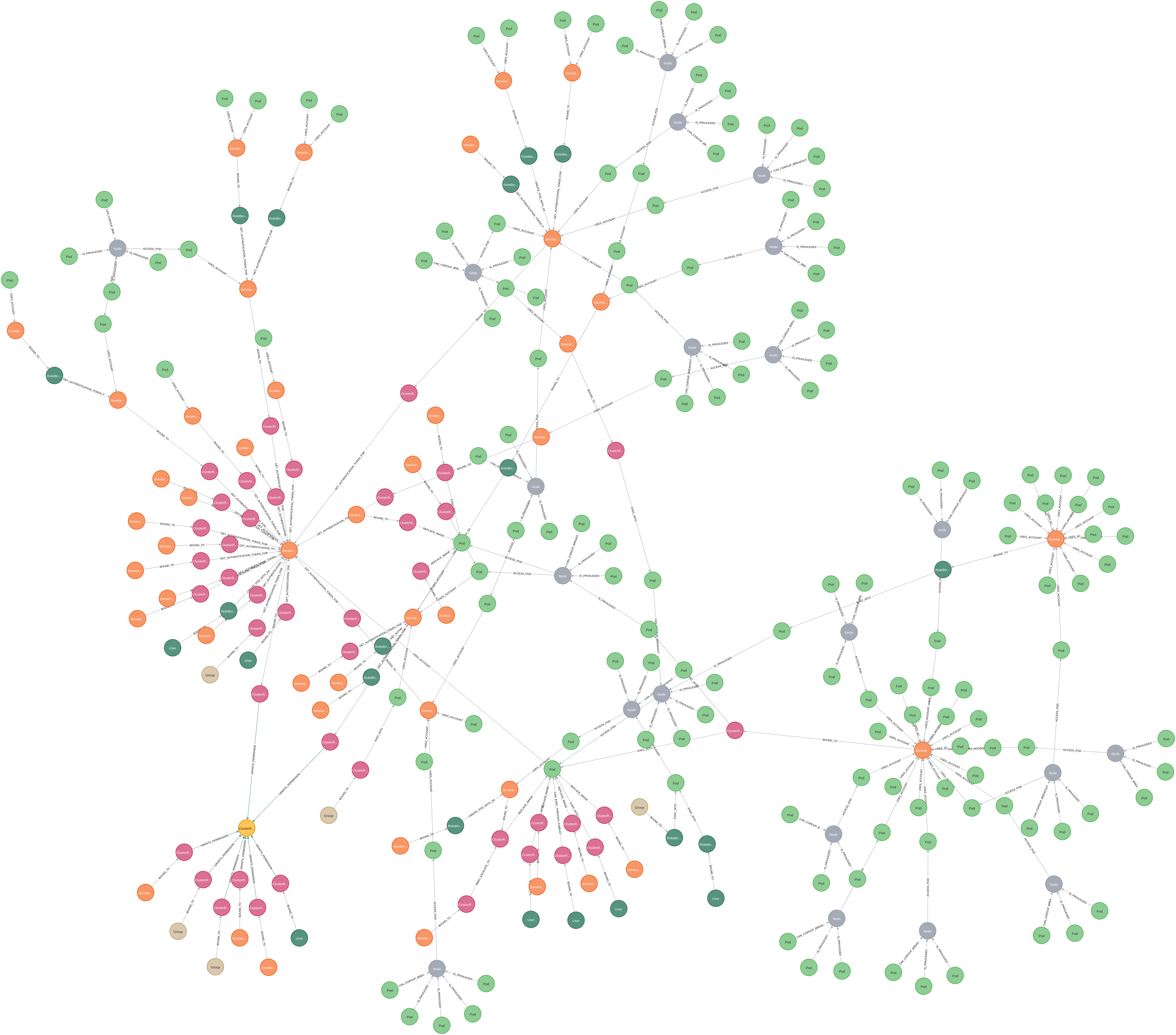

Just as a final word, we thought we’d leave you with some graphs that have been generated from client engagements. These are all graphs where we query for subjects that could reach cluster administrator. The yellow node in each of these graphs is cluster administrator.