Ventures into Hyper-V - Fuzzing hypercalls

on 15 February, 2019

Introduction

Hyper-V is a virtualization platform built by Microsoft over a decade ago. In recent years it has taken centre stage: it has become core to the Microsoft Azure cloud platform, and it introduces a variety of powerful defences that protect standard Windows 10 machines when Hyper-V features such as Virtualization Based Security (VBS) are enabled. Hyper-V takes advantage of the Intel VT-x and AMD-V instruction set extensions to provide native virtualization; for consistency, the rest of this blog post refers only to Intel VT-x.

Hyper-V

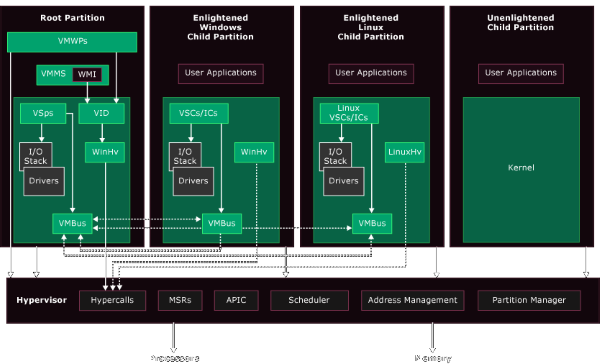

In Hyper-V the main Windows host is known as the root partition, and virtual machines, such as the ones listed in the Hyper-V Manager snap-in, are child partitions. The image by Microsoft [1] displays the overarching concept, which shows:

- Each partition is isolated from another

- The root can create child partitions

- Partitions do not have access to the physical CPU, nor do they handle interrupts; both are handled by the hypervisor

- Use of an IOMMU to remap physical memory addresses to child partition addresses

- Partitions do not have access to other hardware resources and are instead presented with virtual devices

- VMBus is an inter-partition channel that handles I/O between virtual components

- Virtual devices that are aware of it can take advantage of Enlightened I/O for fast, direct VMBus I/O, bypassing any device emulation

For more information, see the Top Level Functional Specification (TLFS) [2] by Microsoft, which describes a large portion of Hyper-V, and the public source code of the Linux Integration Services (LIS) [3], which integrate into Hyper-V.

Address translation

Since this post refers to paging, it is worth briefly touching upon how Hyper-V takes advantage of processor features to achieve it. This post assumes familiarity with the basic paging principles of PML4, PDPT, PDT, PTE etc., which can be found in the Windows Internals [4] book.

A processor contains a Translation Lookaside Buffer (TLB), a cache of the most recent virtual-to-physical address mappings. When a virtual address (VA) to physical address (PA) translation is required, the TLB is inspected first. On a TLB miss the page tables are walked to obtain the mapping, which is then inserted into the TLB; a page fault is raised only if the walk finds no valid mapping.

Virtualization takes this concept further and introduces:

- System Physical Address (SPA) - physical memory address of the machine on which Hyper-V/parent partition runs

- Guest Physical Address (GPA) - physical memory address of the virtual machine

- Guest Virtual Address (GVA) - virtual memory address in the virtual machine associated with a GPA

To maintain the GVA -> GPA -> SPA mapping, Hyper-V can use a Shadow Page Table (SPT), a software-based structure that combines both translations into a single table.

Hyper-V extends this further by using Intel VT Extended Page Tables (EPT) to provide Second Level Address Translation (SLAT). This performs the two-stage address translation GVA -> GPA -> SPA directly in hardware, which also stores the mappings, avoiding the slower software shadow implementation.
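To make the two translation stages concrete, here is a minimal user-mode sketch. It is a toy model and not how EPT is laid out: it assumes flat, single-level tables indexed by page number, and the table contents and the names `guest_page_table`, `slat_table` and `gva_to_spa` are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_MASK  0xFFFull
#define NUM_PAGES  8
#define UNMAPPED   UINT64_MAX

/* Hypothetical mappings, indexed by page number. */
static const uint64_t guest_page_table[NUM_PAGES] = { /* GVA page -> GPA page */
    3, 1, UNMAPPED, 0, UNMAPPED, UNMAPPED, UNMAPPED, UNMAPPED
};
static const uint64_t slat_table[NUM_PAGES] = {       /* GPA page -> SPA page */
    0x200, 0x201, 0x17, 0x44, UNMAPPED, UNMAPPED, UNMAPPED, UNMAPPED
};

/* One translation stage: swap the page number, keep the 12-bit page offset. */
static uint64_t translate_stage(const uint64_t *table, uint64_t addr)
{
    uint64_t page = addr >> PAGE_SHIFT;

    assert(table != NULL);
    if (page >= NUM_PAGES || table[page] == UNMAPPED)
        return UNMAPPED;
    return (table[page] << PAGE_SHIFT) | (addr & PAGE_MASK);
}

/* Stage 1 uses tables the guest controls; stage 2 uses tables only the
 * hypervisor controls. A failure in either stage yields UNMAPPED. */
uint64_t gva_to_spa(uint64_t gva)
{
    uint64_t gpa = translate_stage(guest_page_table, gva);
    return translate_stage(slat_table, gpa);
}
```

With these sample tables, `gva_to_spa(0x0123)` goes through GPA `0x3123` and ends at SPA `0x44123`. The guest only ever sees and edits the first table, which is the property the later sections rely on.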

Setup

Two laptops were connected via Ethernet to enable network debugging, since COM serial debugging was too slow. IP addresses were assigned by Automatic Private IP Addressing (APIPA), giving each laptop an address in the range 169.254.0.0/16.

Three WinDbg instances ran on the debugger laptop (Laptop_1), each using a unique port and attached to one target on Laptop_2: the Hyper-V hypervisor, the root partition kernel and the child partition kernel, as seen in the image below.

WinDbg ports used were:

- Hypervisor as 50001

- Root partition as 50002

- Guest partition as 50003

To enable hypervisor debugging on the Windows 10 root partition, in an elevated cmd.exe the following commands were run:

bcdedit /hypervisorsettings net hostip:<laptop_1_debugger_ip> port:50001 key:1.2.3.1

bcdedit /set hypervisordebug on

bcdedit /dbgsettings net hostip:<laptop_1_debugger_ip> port:50002 key:1.2.3.2

bcdedit /set dbgtransport kdnet.dll

bcdedit /debug on

bcdedit /set hypervisorlaunchtype auto

bcdedit /bootdebug on

And for child VM debugging, the following commands were run in the guest:

bcdedit /debug on

bcdedit /dbgsettings net hostip:<laptop_1_debugger_ip> port:50003 key:1.2.3.3

bcdedit /set {bootmgr} displaybootmenu yes

bcdedit /set nointegritychecks on

Hypercalls

Hypercalls are a similar concept to privileged NT system calls made via the x86/x64 SYSCALL and SYSENTER instructions, but instead of a context switch from R3 to R0, the VMCALL instruction is executed. VMCALL causes a VM exit: the processor saves the partition's state in its VM Control Structure (VMCS) and switches from the child partition to the hypervisor, which executes the hypercall handler found in a dispatch table. A VMRESUME is then issued to return from the hypervisor to the child partition, restoring state from the VMCS.

Hypercalls have to be made from CPL0, i.e. from the kernel, and all pointers to buffers passed into hypercalls have to be physical addresses. As described under Address translation, the physical address in a VM is a GPA, and it is the GPA, not the SPA, that the hypercall requires.

Shadow Page Tables (SPT) are a software virtualization implementation that translates GVA -> GPA -> SPA using large TLB caches. Whenever the guest VM modifies a page mapping, the hypervisor must adjust the SPT to match, which becomes costly in CPU cycles for anything even remotely memory intensive and leads to the single largest performance overhead for virtualization. This is resolved by Second Level Address Translation (SLAT), which uses EPT to store the address translations, removes the need to context switch on VM page faults, and significantly reduces processor and memory overhead. More about Shadow Page Tables can be read in the PhD thesis Efficient Memory Virtualization [5], Section 2.2.2 Hypervisor-enabled Shadow Paging.

Interesting note: now that the reader is aware of virtualization address translations, it follows that kernel-level malware in a child VM can’t simply flip the SMEP/NX bit on a GVA or GPA page to gain executable permissions as past malware did, because the real address is obtained by a translation to an SPA, which holds the accurate and untampered page permissions. *That is not to say the hypervisor can’t be compromised: if you break out of the child into the root and flip the root SPA permission for your GPA you have got your execution, but at that point you have escaped your VM anyway. The bar has nonetheless been raised yet again for malware developers.*

Viridian Fuzzer

The first way to fuzz documented calls is to use Microsoft's recommended interface in winhv.sys, which abstracts away the internals. However, although a winhv.h header was released in the Vista Driver Kit Build 6000, there were no libraries to link against. An alternative approach is to execute the VMCALL instruction directly, provided the registers are set up and the stack frame is built correctly. Viridian Fuzzer (VIFU) is built using the latter method. It is a kernel driver built by Amardeep @AstralVX to fuzz hypercalls, execute CPUID instructions, and read and write Model Specific Registers (MSRs) and Synthetic MSRs, which are virtual MSRs in a VM.

Documented hypercalls can be extracted from the TLFS document provided by Microsoft. The TLFS PDF contains a table of documented hypercall codes in Appendix A (0xBC as of revision 5.0C), which gives the following information for each call:

- Is repeatable

- Is fast call

- Hypercall name

- Caller types

- Partition privileges required

The Python script extract_hypercalls_from_pdf.py was created to parse the TLFS PDF and extract the text of the hypercall table. The Adobe PDF file format turned out to be a bit strange, with random non-printable characters all over the place, so the extraction is not perfect. No wonder you can’t copy/paste correctly from PDFs.

The following 64-bit structure is defined in VIFU for a hypercall input value.

#pragma warning(disable:4214)

#pragma warning(disable:4201)

#pragma pack(push, 1)

//

// As defined in the MS TLFS - the Hypercall 64b value

//

// 63:60|59:48        |47:44|43:32    |31:27|26:17             |16  |15:0

// -----+-------------+-----+---------+-----+------------------+----+---------

// Rsvd |Rep start idx|Rsvd |Rep count|Rsvd |Variable header sz|Fast|Call Code

// 4b   |12b          |4b   |12b      |5b   |9b                |1b  |16b

//

typedef volatile union

{

struct

{

UINT16 callCode : 16;

UINT16 fastCall : 1;

UINT16 variableHeaderSize : 9;

UINT16 rsvd1 : 5;

UINT16 repCnt : 12;

UINT16 rsvd2 : 4;

UINT16 repStartIdx : 12;

UINT16 rsvd3 : 4;

};

UINT64 AsUINT64;

} HV_X64_HYPERCALL_INPUT, *PHV_X64_HYPERCALL_INPUT;

C_ASSERT(sizeof(HV_X64_HYPERCALL_INPUT) == 8);

[..]

#pragma pack(pop)

#pragma warning(default:4201)

#pragma warning(default:4214)
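Bitfield layout is compiler-specific, so the same 64-bit value can also be built with explicit shifts and masks. The following is a minimal user-mode sketch, not code from VIFU: the helper names are hypothetical, the bit positions are taken from the layout comment above, and the variable header size is left at zero.

```c
#include <assert.h>
#include <stdint.h>

/* Bit positions from the TLFS layout: Fast = bit 16,
 * Rep count = bits 43:32, Rep start index = bits 59:48. */
#define HC_FAST_SHIFT       16
#define HC_REP_COUNT_SHIFT  32
#define HC_REP_START_SHIFT  48
#define HC_12BIT_MASK       0xFFFull

/* Hypothetical helper: pack the fields into the hypercall input value. */
uint64_t hc_make_input(uint16_t call_code, int fast,
                       uint16_t rep_count, uint16_t rep_start_idx)
{
    /* Both rep fields are 12 bits wide. */
    assert(rep_count <= HC_12BIT_MASK && rep_start_idx <= HC_12BIT_MASK);

    return (uint64_t)call_code
         | ((uint64_t)(fast ? 1 : 0) << HC_FAST_SHIFT)
         | ((uint64_t)rep_count << HC_REP_COUNT_SHIFT)
         | ((uint64_t)rep_start_idx << HC_REP_START_SHIFT);
}

/* Hypothetical accessors for the reverse direction. */
uint16_t hc_call_code(uint64_t v) { return (uint16_t)(v & 0xFFFF); }
int      hc_is_fast(uint64_t v)   { return (int)((v >> HC_FAST_SHIFT) & 1); }
uint16_t hc_rep_count(uint64_t v)
{
    return (uint16_t)((v >> HC_REP_COUNT_SHIFT) & HC_12BIT_MASK);
}
uint16_t hc_rep_start(uint64_t v)
{
    return (uint16_t)((v >> HC_REP_START_SHIFT) & HC_12BIT_MASK);
}
```

For example, `hc_make_input(0x0008, 1, 0, 0)` yields `0x10008`, which is call code 0x8 with the fast flag set.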

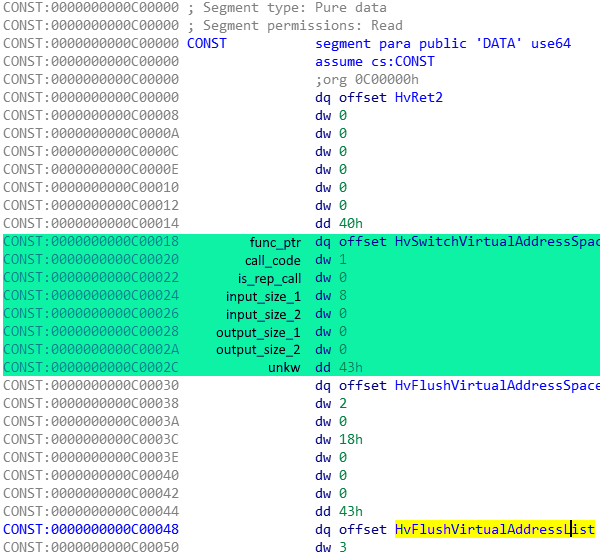

Continuing the research, an interesting finding by @gerhart_x showed that the hypervisor binary hvix64.exe contains a dispatch table for all hypercalls. Each entry in the dispatch table is 24 bytes, with the structure shown below (field sizes in bytes):

func_ptr : 8

call_code : 2

is_rep_call : 2

input_size_1 : 2

input_size_2 : 2

output_size_1 : 2

output_size_2 : 2

unkw : 4
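As a rough illustration of how such entries can be decoded from a raw copy of the table, here is a minimal C sketch. The field names and sizes come from the listing above; the little-endian byte order (as on x64), the struct name and the function names are assumptions, and this is not the author's IDAPython script.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define HC_ENTRY_SIZE 24 /* 8 + 6 * 2 + 4 bytes */

typedef struct {
    uint64_t func_ptr;
    uint16_t call_code;
    uint16_t is_rep_call;
    uint16_t input_size_1;
    uint16_t input_size_2;
    uint16_t output_size_1;
    uint16_t output_size_2;
    uint32_t unkw;
} hc_dispatch_entry;

/* Read an n-byte little-endian unsigned integer (n <= 8). */
static uint64_t read_le(const uint8_t *p, size_t n)
{
    uint64_t v = 0;
    for (size_t i = 0; i < n; i++)
        v |= (uint64_t)p[i] << (8 * i);
    return v;
}

/* Decode entry number idx from a raw byte copy of the dispatch table. */
hc_dispatch_entry hc_parse_entry(const uint8_t *table, size_t idx)
{
    const uint8_t *p;
    hc_dispatch_entry e;

    assert(table != NULL);
    p = table + idx * HC_ENTRY_SIZE;

    e.func_ptr      = read_le(p, 8);
    e.call_code     = (uint16_t)read_le(p + 8, 2);
    e.is_rep_call   = (uint16_t)read_le(p + 10, 2);
    e.input_size_1  = (uint16_t)read_le(p + 12, 2);
    e.input_size_2  = (uint16_t)read_le(p + 14, 2);
    e.output_size_1 = (uint16_t)read_le(p + 16, 2);
    e.output_size_2 = (uint16_t)read_le(p + 18, 2);
    e.unkw          = (uint32_t)read_le(p + 20, 4);
    return e;
}
```

Reading the fields byte by byte avoids depending on struct packing or alignment, which matters when the table bytes are dumped from a binary at an arbitrary offset.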

Combining the knowledge of this hypercall dispatch entry structure with the previously extracted hypercall names, an IDAPython script, extract_vmcall_handler_table_apply_idb.py, was created to apply these modifications to the hvix64.exe IDB for easier analysis.

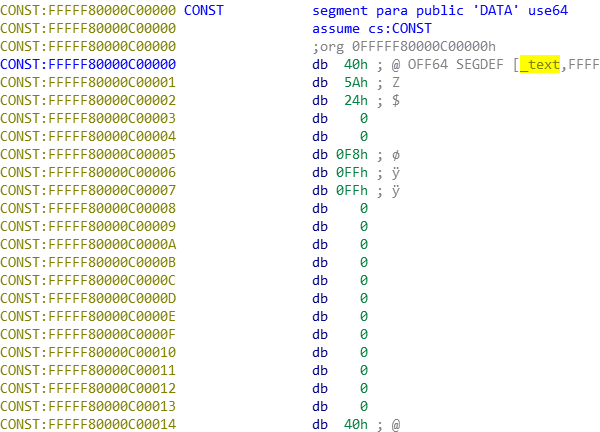

Below is the IDB pre-modification.

Applying the IDAPython modifications to the CONST section resulted in a more readable section, as seen below.

Additionally, the IDAPython script generates a formatted, valid C header for import into the VIFU driver. A snippet of the header follows:

HYPERCALL_ENTRY HypercallEntries[] = {

{"HvCallUnmapDevicePages" , 0x0, 0, 0x0, 0x0},

{"HvSwitchVirtualAddressSpace" , 0x1, 0, 0x8, 0x0},

{"HvFlushVirtualAddressSpace" , 0x2, 0, 0x18, 0x0},

{"HvFlushVirtualAddressList" , 0x3, 1, 0x18, 0x0},

{"HvGetLogicalProcessorRunTime" , 0x4, 0, 0x8, 0x20},

{"HvCallUnmapDevicePages" , 0x5, 0, 0x0, 0x0},

{"HvCallUnmapDevicePages" , 0x6, 0, 0x0, 0x0},

{"HvCallUnmapDevicePages" , 0x7, 0, 0x0, 0x0},

{"HvNotifyLongSpinWait" , 0x8, 0, 0x8, 0x0},

{"sub_FFFFF800002BD6A0" , 0x9, 0, 0x8, 0x0},

[..]

The keen reader will notice sub_FFFFF800002BD6A0, a hypercall not documented in the TLFS. Interestingly, many such undocumented hypercalls were found, but most required additional partition privileges to call successfully.

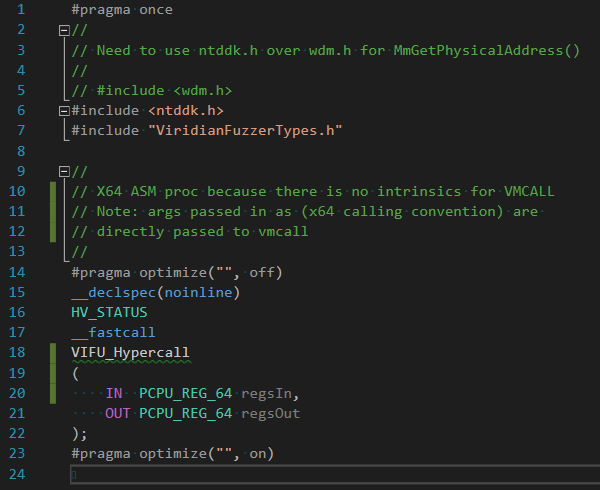

Making a hypercall requires either (1) calling the function pointer in the mapped hypercall routine page, or (2) manually executing VMCALL from CPL0. Choosing the latter requires a MASM file that sets up the register parameters and stack frame correctly before executing VMCALL, because there is no compiler intrinsic for VMCALL. The declaration to execute our hypercall caller can be seen below:

The TLFS hypercall conventions were stated as follows:

- Memory-based (non-fast) hypercall: RCX = callcode, RDX = in GPA, R8 = out GPA

- Fast register-based hypercall: RCX = callcode, RDX = in arg, R8 = in arg

- Extended fast register-based hypercall: RCX = callcode, XMM0 to XMM5 used

- Volatile registers: RCX, RDX, R8, R9, R10, R11, and XMM0 - XMM5

- RAX, RDX, R8 always overwritten with hypercall result and output params

Thus, assembly instructions were created as seen below to follow the calling convention specifications.

;

; Hypercall stack frame setup

;

push rsi

push rdi

;

; Stores output PCPU_REG_64

;

push rdx

mov rsi, rcx

;

; Hypercall inputs

; RCX = Hypercall input value

; RDX = Input param GPA

; R8 = Output param GPA

;

mov rcx, qword ptr [rsi+10h]

mov rdx, qword ptr [rsi+18h]

mov r8, qword ptr [rsi+30h]

;

; Fastcall check

;

mov rax, rcx

shr rax, 10h ; Fast flag is bit 16 of the hypercall input value

and eax, 1

cmp eax, 1

jz EXT_HYPERCALL_XMM_SETUP

mov rax, qword ptr [rsi+00h]

mov rbx, qword ptr [rsi+08h]

mov rdi, qword ptr [rsi+28h]

mov r9, qword ptr [rsi+38h]

mov r10, qword ptr [rsi+40h]

mov r11, qword ptr [rsi+48h]

jmp MAKE_VMCALL

;

; Extended fast hypercall

;

EXT_HYPERCALL_XMM_SETUP:

movq xmm0, qword ptr [rsi+50h]

movq xmm1, qword ptr [rsi+60h]

movq xmm2, qword ptr [rsi+70h]

movq xmm3, qword ptr [rsi+80h]

movq xmm4, qword ptr [rsi+90h]

movq xmm5, qword ptr [rsi+0a0h]

MAKE_VMCALL:

int 3

vmcall

;

; Move any output data to RSI which is PCPU_REG_64

;

pop rsi

mov qword ptr [rsi+00h], rax

mov qword ptr [rsi+08h], rbx

mov qword ptr [rsi+10h], rcx

mov qword ptr [rsi+18h], rdx

mov qword ptr [rsi+28h], rdi

mov qword ptr [rsi+30h], r8

mov qword ptr [rsi+38h], r9

mov qword ptr [rsi+40h], r10

mov qword ptr [rsi+48h], r11

pop rdi

pop rsi

;

; RAX from vmcall is return code for our subroutine too

;

ret

The fuzzing code in the driver does not use any existing fuzzing tools such as Radamsa, American Fuzzy Lop (AFL), Peach Fuzzer, honggfuzz, libFuzzer or domato, as mentioned by Felix Schmidt from MWR in the post What The Fuzz. This is because the majority of the time was spent building the kernel driver to interact successfully with the hypervisor and debugging BSODs. In addition, no source code was available, hence this was a purely black box fuzzing solution.

Hypercall fuzzing attempts crossed between user mode and kernel mode via IOCTLs, in the hope of abstracting away the hypervisor interface and its complexities behind user-mode IOCTLs. However, the final code is still tightly bound to the kernel due to the mandatory use of nonpaged pool pages. The fuzzer logic was as follows:

- Iterating through all hypercalls - both documented and undocumented

- Trying both repeatable and non-repeatable flags

- Trying both fast and non-fast flags

- Trying extended registers (XMMn) if fast call set

- Setting bitmasks, as the hypervisor uses them a lot, e.g. 0x8, 0x80, 0x8000000000000000 etc.

- Allocating valid GPAs via ExAllocatePool(NonPagedPool, …), filled with 0x41s and physical pointers to the buffer itself, to try to satisfy pointers and structures nested within structures, and to bypass early non-NULL pointer checks in hypercall subroutines
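The last two items can be sketched in user-mode C as follows. This is an illustration under assumptions rather than the VIFU code: the function names and the `stride` parameter are hypothetical, the exact placement of the self pointers in the real fuzzer is not known, and in the driver `self_gpa` would be the physical address of the nonpaged allocation rather than an arbitrary constant.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Fill buf with 0x41, then overwrite every stride-th 8-byte slot with
 * self_gpa so that nested pointer reads land back inside the buffer. */
void fuzz_fill_buffer(uint8_t *buf, size_t len, uint64_t self_gpa, size_t stride)
{
    assert(buf != NULL && stride > 0);

    memset(buf, 0x41, len);
    for (size_t slot = 0; (slot + 1) * 8 <= len; slot += stride)
        memcpy(buf + slot * 8, &self_gpa, sizeof self_gpa);
}

/* Generate single-bit masks with the high bit of each nibble set:
 * 0x8, 0x80, 0x800, ... up to 0x8000000000000000.
 * Returns the number of values written to out (at most max). */
size_t fuzz_bitmask_seeds(uint64_t *out, size_t max)
{
    size_t n = 0;

    assert(out != NULL);
    for (unsigned bit = 3; bit < 64 && n < max; bit += 4)
        out[n++] = 1ull << bit;
    return n;
}
```

With a stride of 2, a 64-byte buffer ends up alternating between a self pointer and eight bytes of 0x41, so a handler that dereferences either a pointer field or a plain value field still sees recognisable data.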

A snippet of the kernel code can be seen below where MmGetPhysicalAddress() is used to do the GVA to GPA translation.

Logging

An automated system was set up whereby an elevated batch script was attached to a scheduled task that ran on every logon. The batch script created and started a service via 'sc create [..]; sc start [..];', which in turn loaded the VIFU driver. Before each hypercall, VIFU appended the datetime, the hypercall and the fuzzing attempt about to be made to a logfile on a remote UNC path; after the hypercall executed, the hypercall status code was appended to the logfile. This repeated until all fuzzing attempts were complete. If a crash occurred, no result was written for that attempt, so on the next logon the previous attempt was marked as a crash in the logfile and the next fuzzing attempt was started. The fuzzer typically took a few hours to complete.
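The write-before, write-after scheme is what makes crashes attributable. A minimal sketch of the idea in user-mode C is shown below; the log line format and function names are invented for illustration (the datetime is omitted), and the real driver writes to a remote file rather than to a string.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Written and flushed BEFORE the hypercall; deliberately has no newline. */
int log_format_attempt(char *dst, size_t cap, unsigned call_code, unsigned attempt)
{
    assert(dst != NULL && cap > 0);
    return snprintf(dst, cap, "ATTEMPT code=0x%04X case=%u", call_code, attempt);
}

/* Appended AFTER the hypercall returns; the newline completes the record. */
int log_format_status(char *dst, size_t cap, unsigned long long status)
{
    assert(dst != NULL && cap > 0);
    return snprintf(dst, cap, " STATUS=0x%llX\n", status);
}

/* On the next start: a log that does not end in a newline means the last
 * attempt never reported a status, i.e. the machine crashed during it. */
int log_last_attempt_crashed(const char *log)
{
    size_t n;

    assert(log != NULL);
    n = strlen(log);
    return n > 0 && log[n - 1] != '\n';
}
```

On restart the fuzzer would check `log_last_attempt_crashed`, append a crash marker to the unfinished record, and resume with the next case.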

BSODs

Overall, a few crashes involving memory corruption and denial of service (DoS) were encountered, but none appeared to be eligible for Microsoft's bug bounty program. Fuzzing revealed child partition BSODs at call codes 0x0001, 0x000a, 0x0011 and 0x0012, and time was also spent in IDA and WinDbg to understand the HVSTATUS return codes and to attempt to bypass checks.

[Self VM DOS] Hypervisor GPA write corruption

Output validation on the GPA was found to be strange, since the hypervisor would write past the end of any GPA buffer it was given, provided the page had +W permission. This produced lots of BAD_POOL_HEADER corruption BSODs, until it was suspected that Hyper-V simply does not need to know the child’s memory manager implementation. Whilst Microsoft knows its own kernel memory manager and could ensure that the page to be written to is correct and within bounds, the memory managers of other Hyper-V child VMs such as FreeBSD, Linux, etc. probably aren’t expected to be known, hence Hyper-V relies on the child VM to validate the page ranges it passes in. The data the hypervisor writes to the passed-in GPA is generally not controllable, as it is the result of the hypercall, and on failure a buffer of NULLs is returned.

The TLFS rev 5.0c in fact states:

"For hypercalls that have output parameters, the hypervisor will validate that the partition can be write to the output page. This validation consists of two checks: the specified GPA is mapped and the GPA is marked writable. If either of these tests fails, the hypervisor attempts to generate a memory intercept message. If the validation succeeds, the hypervisor “locks” the output GPA for the duration of the operation. Any attempt to remap or unmap this GPA will be deferred until after the hypercall is complete."

This does not break any security boundary, as the output GPAs supplied can only be within the child they originated from; SLAT ensures that. Lastly, in Hyper-V there are pages known as overlay pages, APIC pages and HypercallRoutine mapping pages, which are mapped from the hypervisor into the guest. Writes to some of these pages were attempted, but without success.

[Self VM DOS] - HvSwitchVirtualAddressSpace

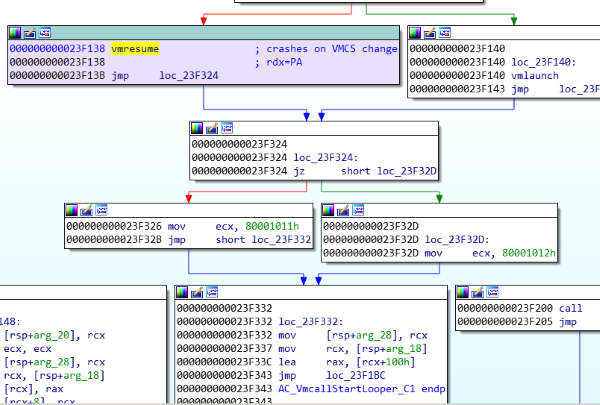

Call code 0x0001, HvSwitchVirtualAddressSpace, needs the partition privilege UseHypercallForAddressSpaceSwitch. Via VMWRITE the hypervisor writes a guest-supplied GPA into the VM Control Structure (VMCS), and on the subsequent VMRESUME a crash occurs, most likely because the saved CR3 (page table) register has been overwritten with a pointer to the start of the GPA. A VMCS definition can be seen in the FreeBSD source code for the x64 architecture; the guest state saved there includes CR3 along with GDTR, GS_BASE, RSP, RIP and all other registers. Thus when the child is resumed it crashes due to a bad page table, with errors printed in the Hyper-V WinDbg, but the root partition and hypervisor survive. Further investigation is needed into the guest-supplied VMCS value and how it could be abused for potential host compromise. The image below shows the VMRESUME which occurs after the VMCALL.

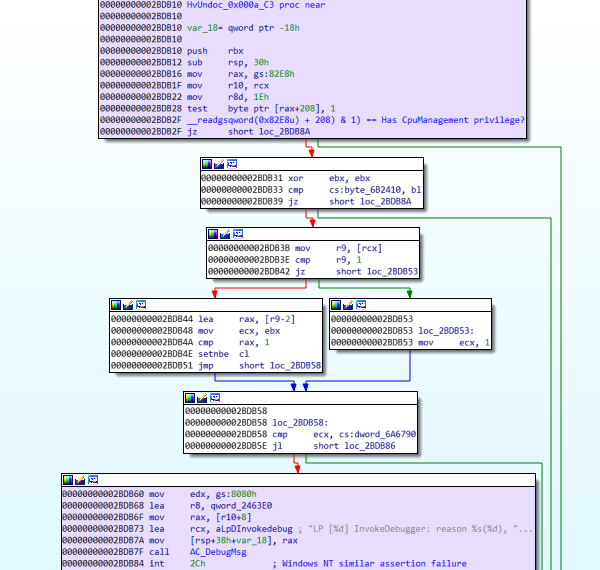

[Hypervisor crash] – Undocumented hypercall 0x000A

Calling the undocumented call code 0x000A results in a hypervisor crash. However, triggering it requires the CpuManagement partition privilege, which can only be granted to a child by the root partition, so it does not cross a security boundary either.

This appears to be a controlled crash caused by executing INT 0x2C; the ReactOS source shows that this is what the Windows NT macro DbgRaiseAssertionFailure expands to.

#define DbgRaiseAssertionFailure __int2c

This callcode 0x000A then appears to be a HvRaiseAssertionFailure() of sorts. Below is the short subroutine for the hypercall at hvix64+BDB2F.

Other

During manual testing, odd BSODs were encountered a few times, most of them non-repeatable and with non-obvious causes.

Conclusion

In conclusion, having fuzzed the hypercalls with a custom kernel fuzzer and reverse engineered the hypervisor binary, there appears to be a fair amount of validation. Memory corruption is difficult: all pages are pinned and copied by the host, there is no concept of passing handle-like objects around, and hypercalls have very strict rules on what goes in and on lengths. Output handling appeared to be possibly a bit looser, with potential for info disclosures. According to a tweet by @long123king, a Microsoft MSRC member, Microsoft already has its own Hyper-V fuzzer called hyperseed. Hence memory corruption bugs won't be easy to find, as they will have been squashed over the years by MSRC. Logic bug attacks are more likely to yield results, such as the VMCS behaviour I have yet to research further, but this comes at the additional price of time spent in IDA and WinDbg understanding the code and finding niche bugs.

This concludes my research into hypercall fuzzing. As I am more experienced at developing drivers than at writing fuzzers, the groundwork has been laid in the Viridian Fuzzer driver; all that remains is for a better fuzzer to be integrated.

Source code

Further reading

Ring 0 to Ring -1 Attacks | Hyper-V IPC Internals by Alex Ionescu - https://www.alex-ionescu.com/syscan2015.pdf

Hyper-V research by Gerhart - https://hvinternals.blogspot.com/

Windows Server Virtualization & The Windows Hypervisor by Brandon Baker - https://www.blackhat.com/presentations/bh-usa-07/Baker/Presentation/BH07_Baker_WSV_Hypervisor_Security.pdf

Attacking hypervisors through hardware emulation by Intel Security - https://www.troopers.de/downloads/troopers17/TR17_Attacking_hypervisor_through_hardwear_emulation.pdf

hvdgk_2005.h released by the Hypervisor Engineering Team in 2005

References

[1] Hyper-V architecture - https://docs.microsoft.com/en-us/virtualization/hyper-v-on-windows/reference/hyper-v-architecture

[2] Hyper-V Top-Level Functional Specification - https://docs.microsoft.com/en-us/virtualization/hyper-v-on-windows/reference/tlfs

[3] Linux Integration Services for Microsoft Hyper-V - https://github.com/LIS

[4] Windows Internals (book)

[5] Efficient Memory Virtualization - https://research.cs.wisc.edu/multifacet/theses/jayneel_gandhi_phd.pdf