Dangers of a Service as a Principal in AWS Resource-Based Policies

By Tom Taylor-MacLean and Matthew Keogh on 17 Jan 2023

Our research looks into dangers associated with allowing an Amazon Web Services (AWS) service to access resources in your account with no additional restrictions. If controls are set up in this erroneous way, cross-account access may be granted which could give attackers a stealthy route to interact with resources held within your account. This could be possible even if your account is set up with no trust relationships to any other AWS customer accounts. Furthermore, this could affect non-routable resources inside Virtual Private Clouds (VPCs) because the communication comes from internal AWS services.

While on a client engagement reviewing policies attached to particular resources, we came across a resource-based policy which, at first glance, looked reasonably restrictive. However, it turned out to reduce the effectiveness of the access controls around the client’s AWS account and resources. This gave malicious actors, especially insider threats, the potential to interact with the client’s infrastructure over the public Internet. After experimenting in our lab environment, we found that this type of misconfiguration is often not recognised by automated tools and so could easily be missed unless policies are manually reviewed.

What Is A Resource-Based Policy?

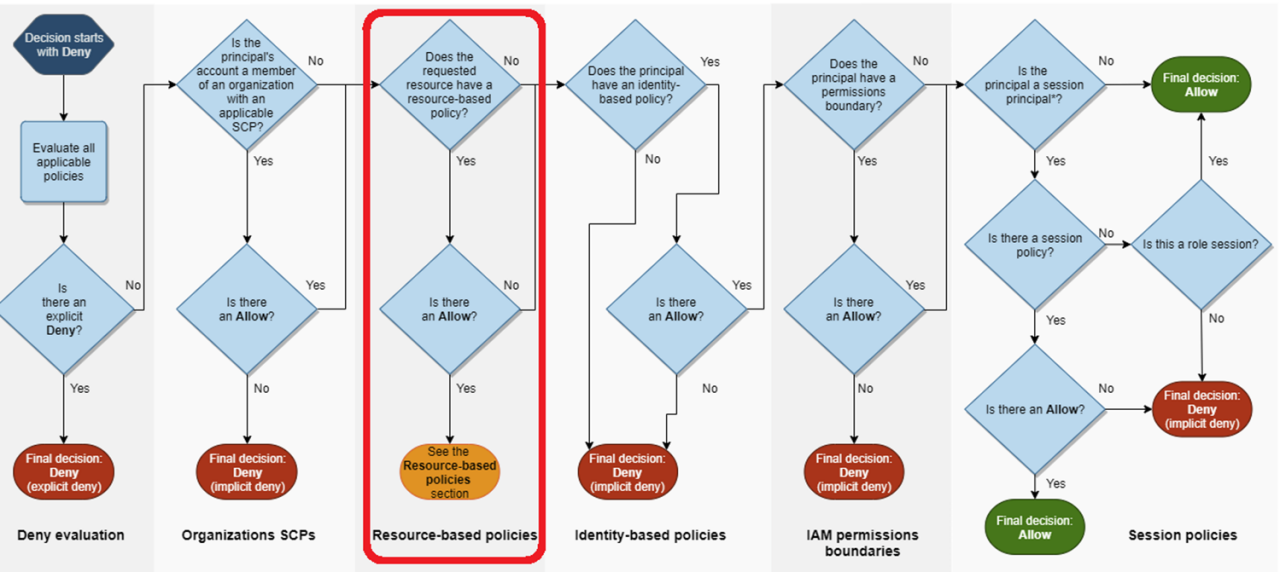

Identity and Access Management (IAM) is implemented by AWS to allow end-users to control access to resources and services within their accounts. It does so by allowing policies to be written which can be attached to entities in the AWS environment. Then, when an action is attempted, a series of checks is completed to assess whether or not the action is permitted by the relevant IAM policies. Often, policies are attached to a certain user or role within the account; these are known as identity-based policies. However, if you want to control more finely who or what has access to a particular resource, e.g. an S3 bucket or a Secret, you could attach a policy to that specific resource instead. This is known as a resource-based policy. Resource-based policies are assessed in the following position within the logic of IAM:

This image shows the IAM Logic Evaluation - from AWS documentation:

https://docs.aws.amazon.com/IAM/latest/UserGuide/reference_policies_evaluation-logic.html#policy-eval-denyallow

AWS supports resource-based policies on many of its core services [1]. These policies help to specify who or what has access to the resource and also restrict the actions that can be performed on it. For example, let's say we have a Simple Notification Service (SNS) topic that we want an S3 bucket to post a message to whenever a file is deleted. Designing this topic in the most secure way, we would use a resource-based policy to ensure only the S3 bucket in question can publish to the topic. The following policy could be used:

{

"Version": "2012-10-17",

"Id": "Policy1656947040400",

"Statement": [

{

"Sid": "Stmt1656947035000",

"Effect": "Allow",

"Principal": {

"AWS": "xxxxxxxx0752"

},

"Action": "sns:Publish",

"Resource": "arn:aws:sns:eu-west-1:xxxxxxxx0752:ec2_sns",

"Condition": {

"ArnEquals": {

"aws:SourceArn": "arn:aws:s3:::testbucket302ui902i0"

}

}

}

]

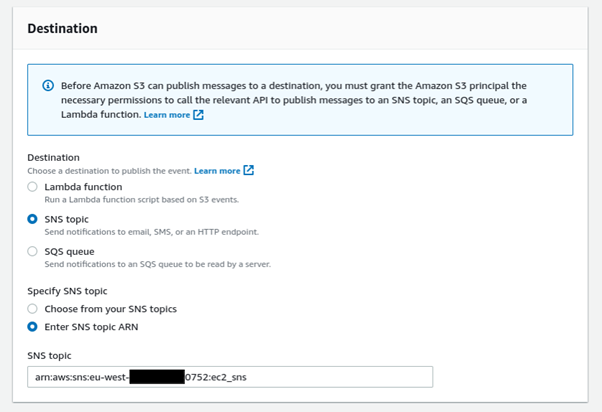

}We set up the event notification on the S3 bucket:

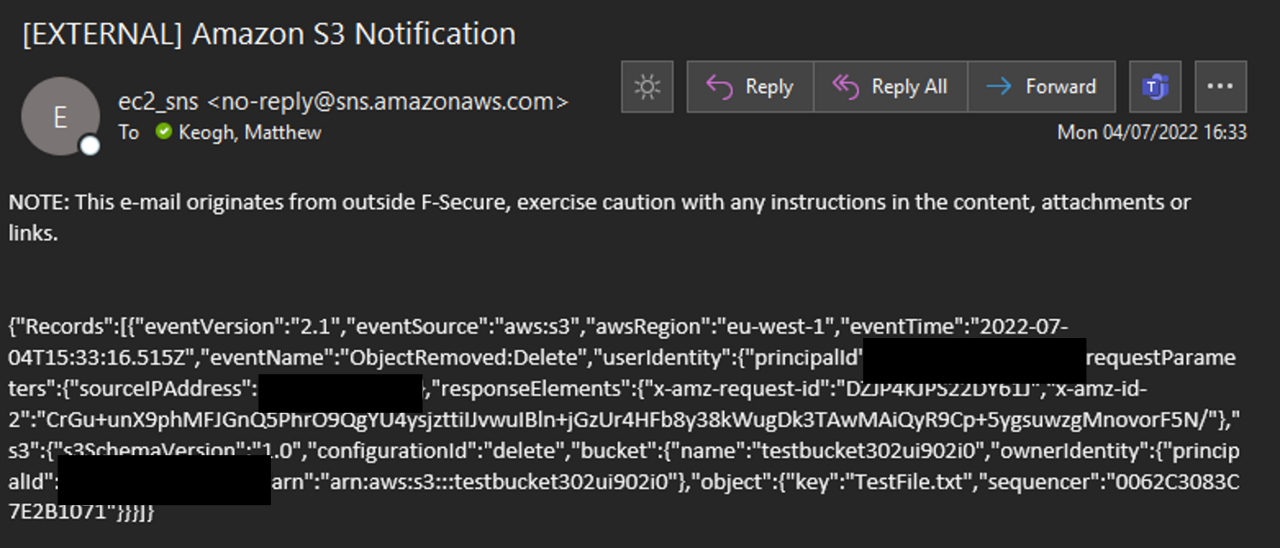
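The same notification can also be expressed programmatically. The following is a minimal sketch, assuming the console setup corresponds to a single topic configuration on the `s3:ObjectRemoved:*` event family; the helper name `build_delete_notification` is ours and purely illustrative.

```python
import json


def build_delete_notification(topic_arn: str) -> dict:
    """Return an S3 notification configuration that publishes to an SNS
    topic whenever an object is removed from the bucket."""
    return {
        "TopicConfigurations": [
            {
                "TopicArn": topic_arn,
                # Covers permanent deletes and delete-marker creation.
                "Events": ["s3:ObjectRemoved:*"],
            }
        ]
    }


if __name__ == "__main__":
    # Topic ARN as shown in the policy above (account ID redacted there,
    # so it stays redacted here).
    config = build_delete_notification(
        "arn:aws:sns:eu-west-1:xxxxxxxx0752:ec2_sns"
    )
    print(json.dumps(config, indent=2))
    # With boto3 installed and credentials configured, this could be
    # applied with:
    #   boto3.client("s3").put_bucket_notification_configuration(
    #       Bucket="testbucket302ui902i0",
    #       NotificationConfiguration=config,
    #   )
```

Keeping the configuration as a plain dictionary makes it easy to review before it is applied to a bucket.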

Now whenever a file is deleted from the S3 bucket, anyone who is subscribed to the topic will get an email, text, or other method of notification, depending on delivery configuration. A basic version of this sends a log of the API request that deleted the file to the subscriber, as is shown below:

Why Could This be a Problem?

The scenario described is an “ideal” setup, but let’s imagine you’ve got hundreds of buckets in account A and another several hundred in account B, all of which you want to get a notification for when an object is deleted within one. The policy shown above quickly becomes difficult to manage, as you need an entry for each bucket in each account.

Sometimes this problem is “overcome” by setting the principal to a wildcard (*) or to a specific service, so that any caller in AWS, or any call made by that service, can interact with the SNS topic. An example of one of these policies is given below:

    {
      "Version": "2012-10-17",
      "Id": "123",
      "Statement": [
        {
          "Sid": "234",
          "Effect": "Allow",
          "Principal": {
            "Service": "s3.amazonaws.com"
          },
          "Action": [
            "sns:Publish"
          ],
          "Resource": "arn:aws:sns:eu-west-1:xxxxxxxx0752:ec2_sns"
        }
      ]
    }

Now we are left with a resource-based policy that allows anything in S3 to communicate with the SNS topic. On initial inspection, this works well, as all buckets from account A and account B can now send messages every time something is deleted.

It is worth noting that if we had set the principal to "AWS": "*", any resource or user would be able to send a message to the topic. However, it is already considered bad practice to set a wildcard as a principal and so we focus instead on the more restrictive option of setting a service as a principal.
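To see why the service-principal policy above is broader than it looks, it can help to model the check in a few lines. The sketch below is a deliberately simplified toy, not the real IAM evaluation logic: it only understands a `Service` principal and exact-match conditions on `aws:SourceAccount` and `aws:SourceArn`. The account IDs are hypothetical stand-ins for the redacted ones, and the request dictionaries are our own invention.

```python
def statement_allows(statement: dict, request: dict) -> bool:
    """Toy check of one Allow statement against a service-originated call.

    Handles only a Service principal and the aws:SourceAccount /
    aws:SourceArn condition keys; real IAM evaluation does much more.
    """
    if statement.get("Effect") != "Allow":
        return False

    principal = statement.get("Principal")
    if not isinstance(principal, dict):
        return False
    services = principal.get("Service", [])
    if isinstance(services, str):
        services = [services]
    if request["service"] not in services:
        return False

    condition = statement.get("Condition", {})
    wanted_account = condition.get("StringEquals", {}).get("aws:SourceAccount")
    if wanted_account is not None and request["source_account"] != wanted_account:
        return False
    # Exact comparison only; wildcards are ignored in this toy.
    wanted_arn = condition.get("ArnEquals", {}).get("aws:SourceArn")
    if wanted_arn is not None and request["source_arn"] != wanted_arn:
        return False
    return True


# Hypothetical 12-digit account IDs standing in for the redacted ones.
VICTIM_ACCOUNT = "111111110752"
ATTACKER_ACCOUNT = "222222221599"

open_statement = {
    "Effect": "Allow",
    "Principal": {"Service": "s3.amazonaws.com"},
    "Action": "sns:Publish",
    "Resource": f"arn:aws:sns:eu-west-1:{VICTIM_ACCOUNT}:ec2_sns",
}
scoped_statement = {
    **open_statement,
    "Condition": {"StringEquals": {"aws:SourceAccount": VICTIM_ACCOUNT}},
}

attacker_request = {
    "service": "s3.amazonaws.com",
    "source_account": ATTACKER_ACCOUNT,
    "source_arn": "arn:aws:s3:::attacker-bucket",
}
victim_request = {
    "service": "s3.amazonaws.com",
    "source_account": VICTIM_ACCOUNT,
    "source_arn": "arn:aws:s3:::testbucket302ui902i0",
}

print(statement_allows(open_statement, attacker_request))    # True
print(statement_allows(scoped_statement, attacker_request))  # False
print(statement_allows(scoped_statement, victim_request))    # True
```

The statement names only the service, so nothing in it distinguishes a bucket in our account from a bucket in anyone else's. S3 bucket ARNs do not contain an account ID, which is why the account has to be pinned explicitly in a condition.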

The Attack

We imagine that a malicious actor has discovered one of our SNS topics which has a resource-based policy that allows communication from S3. This could have been achieved, for example, through enumerating our account, by gaining access to our account through another misconfiguration or by simply using a dictionary attack.

We can send messages to identified SNS topics from any AWS account, including one that we control (provided the origin is S3 in this example). The important thing to take away here is we need no additional permissions in the target account, because we can make the S3 service interact with the topic from an account we control. Within our own account, we can set whatever permissions we need to allow us to set this scenario up.

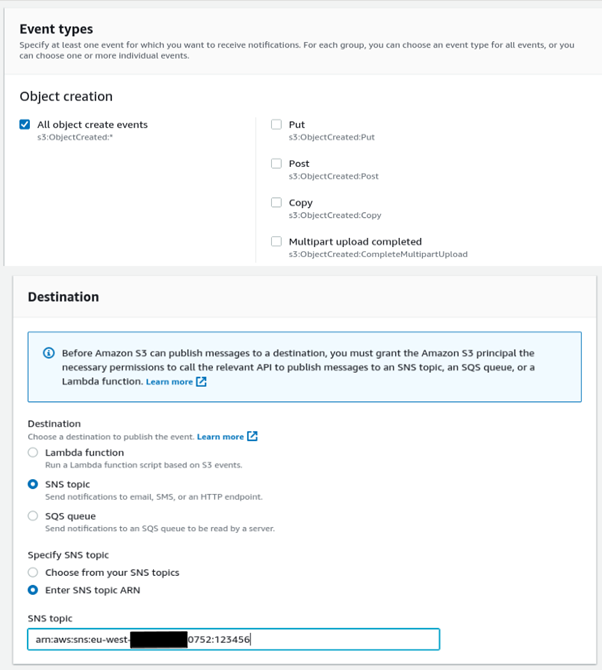

In the adversary-controlled account (ending 1599) we set up an event notification rule on our S3 bucket. We just need to add the correct Amazon Resource Name (ARN) as the destination. The target topic is named 123456 and is in the account ending 0752.

Now whenever we upload a file to our own S3 bucket, it triggers the SNS topic in the target account (ending 0752) and any subscriptions to that topic will get a message. Sending messages to SNS topics from different accounts is certainly interesting, but not likely to achieve much unless the adversary is trying to phish someone subscribed to that topic, or just wants to flood the subscribers with messages by uploading hundreds of objects at a time. In some instances, the SNS topic could be part of a workflow which affects the configuration of a web application, or posts results externally and could be used to trigger further vulnerabilities.

AWS are aware of this issue and call it the Cross-Service Confused Deputy problem [2]. Some services include a page about this within their documentation, but many, including Lambda, do not. The number of possible exploits is limited because only the service itself holds the permission, and we have limited control over what we can induce a service to do. For example, actions made from the terminal of an EC2 instance will not be accepted by a similar resource-based policy, as the API calls are made under the context of an EC2 instance role rather than the EC2 service itself.

Attacking Lambda Functions with Permissive Policies

If a Lambda function's resource-based policy is too permissive [3], it may allow an attacker to invoke it from an outside account. Consider the following function, named rbpResearch, and assume that the Lambda has been granted the relevant KMS and Secrets Manager permissions. The function simply attempts to return a particular secret from Secrets Manager.

    import base64

    import boto3
    from botocore.exceptions import ClientError


    def get_secret():
        secret_name = "arn:aws:secretsmanager:eu-west-1:xxxxxxxx4210:secret:tomTestSecret-yOitnO"
        region_name = "eu-west-1"

        # Create a Secrets Manager client
        session = boto3.session.Session()
        client = session.client(
            service_name='secretsmanager',
            region_name=region_name
        )

        try:
            get_secret_value_response = client.get_secret_value(
                SecretId=secret_name
            )
        except ClientError:
            # Surface Secrets Manager errors to the caller
            raise
        else:
            if 'SecretString' in get_secret_value_response:
                secret = get_secret_value_response['SecretString']
                return secret
            else:
                decoded_binary_secret = base64.b64decode(get_secret_value_response['SecretBinary'])
                return decoded_binary_secret


    def lambda_handler(event, context):
        secret = get_secret()
        try:
            # Echo back any base64-encoded request body alongside the secret.
            # Decode to text because bytes are not JSON-serialisable.
            data = base64.b64decode(event['body']).decode('utf-8')
            resp = {
                "context": {
                    "test": secret,
                    "data": data
                }
            }
        except Exception:
            # No usable body in the event: return the secret only
            resp = {
                "context": {
                    "test": secret
                }
            }
        return resp

If we attempt to add apigateway.amazonaws.com as a principal to the function's resource policy in the console, we receive an error telling us that we must specify either a source ARN or a source account. However, using the command line interface, we can run the following command:

    aws lambda add-permission \
      --function-name arn:aws:lambda:eu-west-1:xxxxxxxx4210:function:rbpResearch \
      --statement-id policyTest \
      --action lambda:InvokeFunction \
      --principal "apigateway.amazonaws.com"

This successfully adds the vulnerable policy:

    {
      "Statement": "{\"Sid\":\"policyTest\",\"Effect\":\"Allow\",\"Principal\":{\"Service\":\"apigateway.amazonaws.com\"},\"Action\":\"lambda:InvokeFunction\",\"Resource\":\"arn:aws:lambda:eu-west-1:xxxxxxxx4210:function:rbpResearch\"}"
    }
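For contrast, a scoped version of the same grant would pin the permission to one specific API. The following is a rough sketch of the arguments that boto3's Lambda `add_permission` call accepts for this. The helper name `scoped_invoke_permission` and the API ID `a1b2c3d4e5` are hypothetical, and the trailing `/*` in the source ARN is our assumption that every stage, method and route of that API should be allowed.

```python
def scoped_invoke_permission(function_arn: str, statement_id: str,
                             region: str, account_id: str,
                             api_id: str) -> dict:
    """Build add_permission arguments that limit API Gateway to one API."""
    # Matches every stage, method and route of the given API only.
    source_arn = f"arn:aws:execute-api:{region}:{account_id}:{api_id}/*"
    return {
        "FunctionName": function_arn,
        "StatementId": statement_id,
        "Action": "lambda:InvokeFunction",
        "Principal": "apigateway.amazonaws.com",
        "SourceArn": source_arn,
    }


if __name__ == "__main__":
    kwargs = scoped_invoke_permission(
        function_arn="arn:aws:lambda:eu-west-1:xxxxxxxx4210:function:rbpResearch",
        statement_id="policyTestScoped",
        region="eu-west-1",
        account_id="xxxxxxxx4210",   # redacted, as elsewhere in this article
        api_id="a1b2c3d4e5",         # hypothetical API ID
    )
    print(kwargs)
    # With boto3 installed and credentials configured:
    #   boto3.client("lambda").add_permission(**kwargs)
```

Because the source ARN contains the owning account ID, an API created in another account cannot satisfy the resulting condition.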

We have chosen API Gateway as our attacking service for three reasons:

- This is a very common integration as API Gateway will often call a backend Lambda function.

- API Gateway does not require the use of a role to make API calls. As soon as a separate role is required, for example EventBridge must make use of a user-created role which performs calls on its behalf, or EC2 where an instance role is used, that role makes the request rather than the service itself. In these cases, the attack fails as the role does not have the correct permissions within the Lambda’s policy.

- API Gateway is flexible in allowing us to send requests and receive responses. We could use GET requests to receive data, or POST requests to send data to the targeted Lambda function.

In our attacker account, we set up an HTTP API, create a stage called stage and a route called test with an integration pointing to the Lambda above.

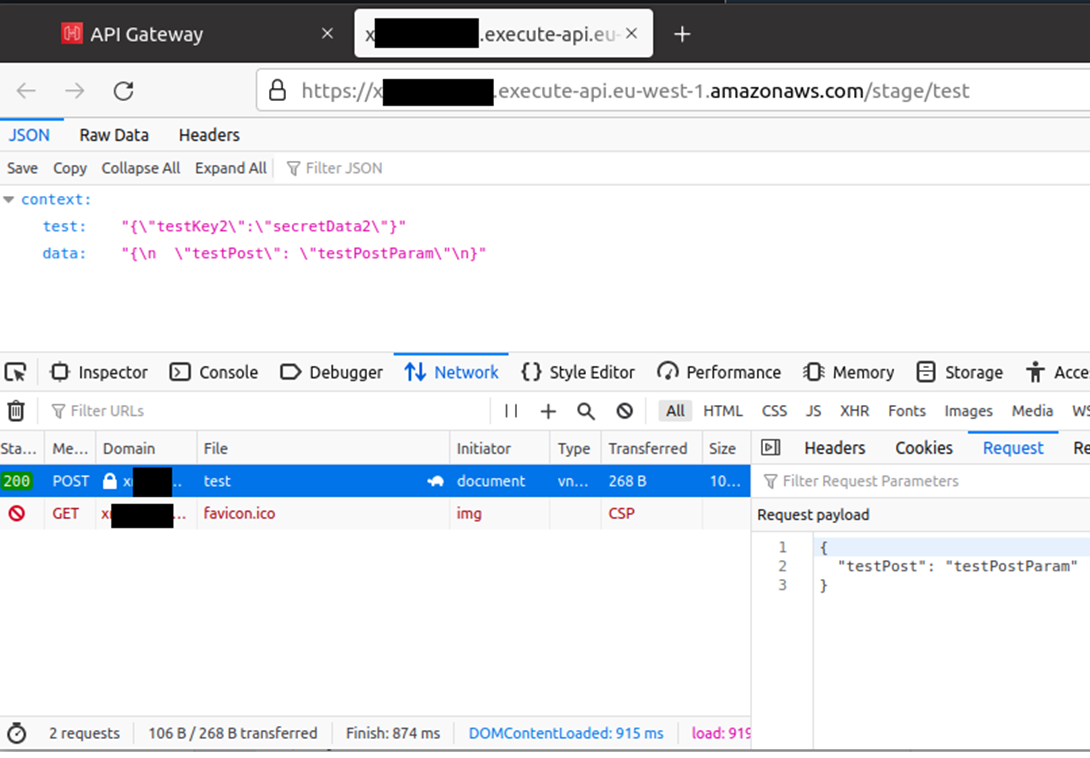

At this point, all we need to do is navigate to the endpoint. Here we demonstrate submitting data through a POST request:

If we are aware of the Lambda function's required inputs, or if it gives verbose error messages which help us to identify how our API Gateway message should be structured, we can create requests to reflect these requirements.

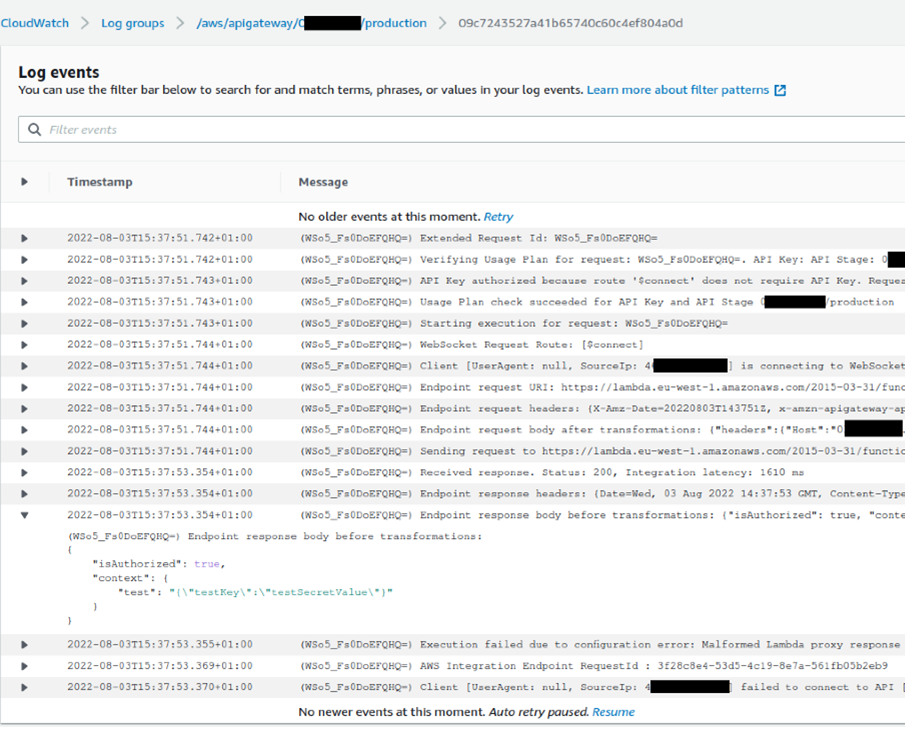

This attack can also work with a WebSockets API. By integrating the Lambda function into the $connect route, then enabling "Log full message data" within the Logs/Tracing section of a stage's options, we can make a call to the API using wscat, then look in our CloudWatch logs to retrieve the Lambda function's output, even if the WebSockets Connection fails.

Importantly, even if your Lambda function is deployed into a private VPC, the attack is still successful as the API Gateway is initially making a call to the public Lambda API.

At the time of writing, AWS do not provide a page discussing the potential for cross-service confused deputy attacks through Lambda.

How Could We Prevent this from Happening?

Care needs to be taken when creating resource-based policies. It’s very easy to make them over-permissive as it can become complicated to get the fine level of access that is required. However, there are a few general rules to keep in mind:

- If the resource will only ever be accessed from the origin account, enforce the principal to be the current account (at minimum). This should be set by default in most environments.

- If multi-account access is required, specify the specific accounts in separate statements within the policy.

- If a service is granted access (e.g. s3.amazonaws.com), always add a condition check on the source ARN and/or source account so that only the expected resource can use the permission. An example is given below, based on the flawed SNS topic policy shown earlier.

    {
      "Version": "2012-10-17",
      "Id": "123",
      "Statement": [
        {
          "Sid": "234",
          "Effect": "Allow",
          "Principal": {
            "Service": "s3.amazonaws.com"
          },
          "Action": [
            "sns:Publish"
          ],
          "Resource": "arn:aws:sns:eu-west-1:xxxxxxxx0752:ec2_sns",
          "Condition": {
            "StringEquals": {
              "aws:SourceAccount": "xxxxxxxx0752"
            }
          }
        }
      ]
    }

Always ask yourself how permissive a resource-based policy is. These can tend to catch people off-guard as they are lured into a false sense of security that their account is separate from other AWS accounts. However, at the end of the day every AWS account hits the same APIs, just with a different set of parameters.
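Since this misconfiguration tends to slip past automated tooling, a small check of your own can help with manual review. The sketch below flags `Allow` statements that name a service principal but carry no condition on `aws:SourceArn` or `aws:SourceAccount`. The function name is ours, and the check only looks for the presence of those keys: it does not judge whether the operator or the values are sensible.

```python
import json

# Condition keys discussed in this article (compared case-insensitively,
# since Lambda writes them as "AWS:SourceArn").
SCOPING_KEYS = {"aws:sourcearn", "aws:sourceaccount"}


def _as_list(value):
    """Policies may hold a single item or a list of items."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_unscoped_service_statements(policy) -> list:
    """Return the Sids of Allow statements that grant access to a service
    principal without any source ARN or source account condition."""
    if isinstance(policy, str):
        policy = json.loads(policy)

    findings = []
    for statement in _as_list(policy.get("Statement")):
        # "aws lambda add-permission" returns the statement as a JSON string.
        if isinstance(statement, str):
            statement = json.loads(statement)
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal")
        if not isinstance(principal, dict) or "Service" not in principal:
            continue
        condition_keys = {
            key.lower()
            for operator_block in statement.get("Condition", {}).values()
            for key in operator_block
        }
        if not condition_keys & SCOPING_KEYS:
            findings.append(statement.get("Sid", "<no Sid>"))
    return findings


if __name__ == "__main__":
    flawed = {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "234",
            "Effect": "Allow",
            "Principal": {"Service": "s3.amazonaws.com"},
            "Action": ["sns:Publish"],
            "Resource": "arn:aws:sns:eu-west-1:xxxxxxxx0752:ec2_sns",
        }],
    }
    print(find_unscoped_service_statements(flawed))  # ['234']
```

A real audit would feed this the policies returned by each service's own API (for example SNS topic attributes or Lambda's get-policy) and would still need a human to confirm that any conditions present are meaningful.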

Related Research

Recently, Datadog released research [4] on a confused deputy vulnerability in AWS AppSync. Though this vulnerability did not use resource-based policies, a similar issue could be seen with the trust policy attached to the role that AppSync assumes.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "appsync.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }

Mitigations against cross-account assumption were implemented at the service level. This meant that once Datadog discovered a validation bypass in the service-level logic designed to prevent cross-account role assumption, they were able to use the flaw to assume the role from another account. With the validation bypassed, the trust policy was satisfied as the AppSync service was the entity assuming the role, even though this call was initiated from the attacker’s account.

References:

[1] A complete list of AWS services that support resource based policies: https://docs.aws.amazon.com/IAM/latest/UserGuide/reference_aws-services-that-work-with-iam.html

[2] Cross-Service Confused Deputy Protection: https://docs.aws.amazon.com/IAM/latest/UserGuide/confused-deputy.html#cross-service-confused-deputy-prevention

[3] Lambda Resource-Based Policies: https://docs.aws.amazon.com/lambda/latest/dg/access-control-resource-based.html#permissions-resource-serviceinvoke

[4] A Confused Deputy Vulnerability in AWS AppSync: https://securitylabs.datadoghq.com/articles/appsync-vulnerability-disclosure/