How to attack distributed machine learning via online training

By Alexey Kirichenko, David Karpuk and Samuel Marchal on 6 October, 2020

Like technologies in many other domains, Machine Learning (ML) techniques, which power a large share of modern Artificial Intelligence (AI) systems, were originally designed to be used in benign and controlled environments. Unfortunately, that assumption does not hold in numerous practical applications of ML models today.

There are many ways to attack ML-based systems, both at training and inference time, and their dependence on data, often coming from uncontrolled environments, significantly broadens their attack surface. In particular, model poisoning, or data poisoning, is an important class of training-time attacks on ML-based systems wherein an attacker injects mislabelled or mis-distributed data into the training process in order to degrade model performance. Among the multiple classes of attacks available in the context of ML, data poisoning stands out as the most serious due to its ability to introduce long-lasting flaws in the targeted model that affect all users and that cannot be fixed without retraining the model on clean data. These threats are inherent to the learning process itself.

While injecting data points of their choice is not always possible or affordable for attackers, many public-facing systems provide adversaries with a rich set of poisoning opportunities. Good examples are systems whose underlying ML models are trained online on distributed training data, meaning that the training data comes from multiple sources owned by multiple parties. This group includes, for instance, search engines, recommender systems, intrusion and malware detection services, and social media chatbots.

A number of poisoning attacks against such systems have been proposed and studied, and some interesting theoretical results have been reported. Nevertheless, there have been almost no high-profile media stories about major incidents related to data poisoning[1]. Generally speaking, the level of concern among organizations running services based on ML models susceptible to poisoning attacks, policy-makers and the general public (including users of those services) is quite low. This is perhaps unsurprising: analogous examples in the cybersecurity field have shown that, unless an actual devastating security incident takes place or a fully practical and dangerous attack is demonstrated, the industry – and society in general – are slow and reluctant to invest time and effort in fixing vulnerabilities and building better defences.

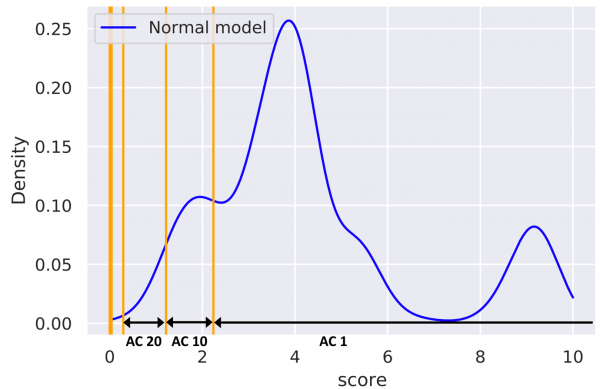

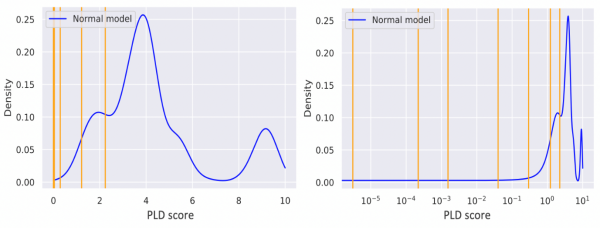

The SHERPA project aims to increase awareness about the problems and risks associated with the development and use of ML-based systems. One of our main project goals is to emphasize the importance of reliable and attack-resilient AI. To construct practical attacks and assess their impact, one has to select a sufficiently specific attack target, preferably representing a wider class of systems used in real-life applications. We chose to study attacks against an ML-based anomaly detection system, trained in an online fashion using distributed data, which could be deployed for cybersecurity purposes. The type of model studied belongs to a class of models named statistical distribution with thresholds. These models leverage a score function for quantifying events and build a distribution of score values for common events. The built distribution is then used to define thresholds that separate common events from rare events. In the following figure we can observe thresholds (orange lines) that separate dense areas of the distribution having a high score from sparser areas having lower scores. The area between two thresholds forms a bin corresponding to a specific anomaly category.

While simple, models of this type can be applied to many use cases and are easy to understand. Consequently, they are widely used in cybersecurity and many other domains. Based on the analysis of our experiments, we propose defence approaches to be studied further, and included in SHERPA’s recommendations.

Distributed and federated learning

Distributed learning refers to a machine learning process which is distributed among multiple data owners, or clients, and coordinated by a central entity called an aggregator. Clients and the aggregator collaborate to train a common global ML model based on all available training data. There are multiple ways to carry out distributed training, and we present here two approaches which are the most relevant for our study.

The first approach is called data aggregation: training data is distributed among multiple clients, and model training is carried out entirely by an aggregator. Each client submits its local dataset, the local datasets are aggregated into a global dataset, and the aggregator then uses the global dataset to train a global model.

An alternative approach is federated learning, which delegates parts of the training process to clients. Instead of providing their local datasets, each client uses locally collected data to train a local model. These local models are sent to an aggregator, which combines the local models into a global model. Federated learning has a number of advantages over data aggregation, including communication efficiency (local models are typically much smaller than the datasets they are trained on), and privacy-preservation (potentially sensitive data owned by the clients does not need to be shared with any other parties or transmitted over the network). Federated learning also has the benefit of offloading some of the computation to the clients, which can be desirable when the client devices have unused processing power (as is often the case for modern computers and mobile devices).
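A deliberately tiny sketch can contrast the two approaches. It uses a hypothetical toy model (the mean of each client's numeric samples) rather than anything from this study, and it combines local models with a sample-weighted average in the style of FedAvg, which is one common federated learning scheme, not necessarily the one a given system uses. For this toy model both approaches yield the same global model, but only federated averaging keeps the raw samples on the clients.

```python
from statistics import fmean

# Hypothetical toy "model": the mean of a client's numeric samples.

def train(samples):
    """Fit the toy model on a list of numbers."""
    return fmean(samples)

def data_aggregation(client_datasets):
    """Clients submit raw data; the aggregator pools it and trains once."""
    pooled = [x for dataset in client_datasets for x in dataset]
    return train(pooled)

def federated_averaging(client_datasets):
    """Clients train locally and submit only (local_model, sample_count).

    The aggregator combines local models with a sample-weighted average,
    as in FedAvg; raw samples never leave the clients.
    """
    updates = [(train(d), len(d)) for d in client_datasets]  # computed on clients
    total = sum(n for _, n in updates)
    return sum(model * n for model, n in updates) / total

clients = [[1.0, 2.0, 3.0], [10.0, 20.0], [5.0]]
print(data_aggregation(clients), federated_averaging(clients))
```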

The anomaly detection model

The anomaly detection model we chose to attack is one that is designed to detect anomalous process launch events on a computer system. In the computer security domain, benign events are much more frequent than events connected with attacks or malicious activity. By collecting a distribution of events observed on multiple computers, one or several threshold values can be assigned which separate normal events, where the distribution is dense, from anomalous events, where the distribution is sparse. At inference time, when new events are observed, process launch distribution (PLD) score values can be computed and compared to threshold values in order to determine how anomalous a specific event is.

Operations in computing systems are carried out by processes. Processes often launch other processes – for example, a web browser may start a PDF reader to open a PDF file found on the Internet. An action of a parent process starting, or launching, a child process is called a process creation event. Analysis of historical attack data shows that the most reliable signs of attack occur when common processes are used in anomalous ways, i.e. when a common parent process starts a common child process, but their ordered pair is rare. For instance, winword.exe often starts other processes and cmd.exe is often started by other processes, but it is very unusual to see winword.exe starting cmd.exe (such an event is a strong signal of a spear-phishing attack). It is, however, undesirable to raise a security alert if one of the processes in an observed pair is rare, since rare, benign processes are launched on computer systems when things like updates and software installers are executed.

Based on the above observations, a statistical distribution model can be designed and trained to detect suspicious process creation events. Such a model should consider the following three heuristics:

- How common the child process is, i.e., how often it is started by other processes

- How common the parent process is, i.e., how often it starts other processes

- How common the process creation (parent, child) ordered pair is.

In order to create a model based on the above, we chose a score function based on the pointwise mutual information (PMI) between two processes. Given a parent process starting a child process, the PMI for this process creation event is given by the following equation (where p denotes probability).

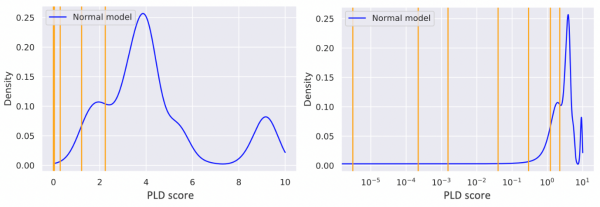
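The equation itself is only available as an image. The sketch below assumes the score is the ratio p(parent, child) / (p(parent) · p(child)), i.e. PMI before taking the logarithm, with probabilities estimated from the count table. This is an assumption, chosen because the text states that scores are always positive and approach zero for anomalous events, which the logarithmic form would not give. Function names and counts are hypothetical.

```python
from collections import Counter

def marginals(table):
    """Derive parent counts, child counts and the total from pair counts."""
    parent_counts, child_counts = Counter(), Counter()
    for (parent, child), n in table.items():
        parent_counts[parent] += n  # how often `parent` starts other processes
        child_counts[child] += n    # how often `child` is started by others
    return parent_counts, child_counts, sum(table.values())

def pld_score(table, parent, child):
    """Assumed score: p(parent, child) / (p(parent) * p(child)).

    With probabilities estimated as count / total this simplifies to
    c_pair * total / (c_parent * c_child). Both processes must appear
    in the table, otherwise the denominator is zero.
    """
    parent_counts, child_counts, total = marginals(table)
    return table[(parent, child)] * total / (parent_counts[parent] * child_counts[child])

# Made-up counts: cmd.exe is started often, but rarely by winword.exe.
table = Counter({
    ("explorer.exe", "chrome.exe"): 600,
    ("explorer.exe", "cmd.exe"): 300,
    ("winword.exe", "splwow64.exe"): 99,
    ("winword.exe", "cmd.exe"): 1,
})
print(pld_score(table, "winword.exe", "cmd.exe"))   # small: rare pair
print(pld_score(table, "explorer.exe", "cmd.exe"))  # around 1: pair as common as expected
```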

Applying this formula to observed data results in anomaly scores that are always positive. The lower the score, i.e. the closer it is to zero, the more anomalous, and thus the more suspicious, the process creation event is. A global score distribution model is created by collecting and analysing process launches on multiple computers. Since our goal was to identify how anomalous process creation events are with respect to our model, we defined several threshold values corresponding to exponentially lower percentiles of the cumulative distribution. The first threshold is the 10th percentile of our cumulative distribution: the 90% of all events whose scores lie to the right of this value (the highest-scoring events) are assigned to anomaly category 1. The second threshold is the 1st percentile, so process creation events with a score above this threshold but below the first threshold are assigned to anomaly category 10, and so on. The higher the anomaly category, the more suspicious a process creation event is. The following figure depicts an example of a computed score distribution together with the thresholds inferred from it.

Density distribution of anomaly score (blue curve) and thresholds inferred from it (orange lines). Left: linear scale. Right: log scale.
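The threshold construction described above can be sketched as follows. Scores are weighted by event counts, thresholds sit at the 10%, 1%, 0.1%, ... quantiles, and a score is placed in a category by counting how many thresholds lie above it. The number of thresholds and the category labels beyond 10 (20, 30, ...) are assumptions extrapolated from "and so on", and the data is made up.

```python
# Quantile levels 10%, 1%, 0.1%, ...: each threshold is ten times rarer.
QUANTILES = [10.0 ** -k for k in range(1, 7)]
# Hypothetical labels: 1 above the first threshold, then 10, 20, ..., 60.
CATEGORIES = [1] + [10 * k for k in range(1, len(QUANTILES) + 1)]

def weighted_quantile(scored_counts, q):
    """Smallest score s such that at least a fraction q of events score <= s.

    `scored_counts` holds (score, event_count) pairs, so each launch event
    is weighted, not each distinct (parent, child) pair.
    """
    ordered = sorted(scored_counts)
    total = sum(n for _, n in ordered)
    running = 0
    for score, n in ordered:
        running += n
        if running >= q * total:
            return score
    return ordered[-1][0]

def fit_thresholds(scored_counts):
    """Thresholds for the 10%, 1%, 0.1%, ... quantiles (non-increasing)."""
    return [weighted_quantile(scored_counts, q) for q in QUANTILES]

def anomaly_category(score, thresholds):
    """The more thresholds lie above a score, the higher its category."""
    above = sum(1 for t in thresholds if score < t)
    return CATEGORIES[above]

scored = [(5.0, 880), (0.5, 105), (0.05, 12), (0.005, 3)]  # made-up data
thresholds = fit_thresholds(scored)
print(thresholds[:3])                     # [0.5, 0.05, 0.005]
print(anomaly_category(0.2, thresholds))  # 10
```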

Distributed online training of the anomaly detection model

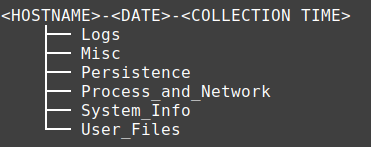

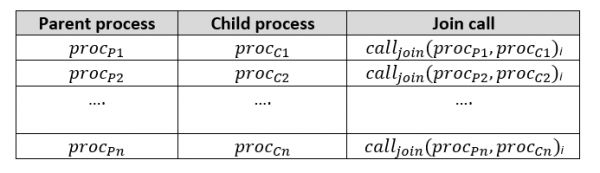

The anomaly detection model is based on an aggregated table containing a count of each unique parent-child process pair that occurred on the system. Each client maintains such a table (below), which is their local model.

Local client model

Clients send their local models, at regular time intervals, to an aggregator. The aggregator then combines them into a global model, which is pushed out to every client. The global model is thus a table containing all the process creation pairs that occurred on all contributing clients.
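A minimal sketch of local models and their aggregation, assuming each local model is a mapping from (parent, child) pairs to launch counts and that the aggregator simply sums them, as described above. The helper names and events are hypothetical.

```python
from collections import Counter

def local_model(events):
    """A client's local model: number of launches per (parent, child) pair."""
    return Counter(events)

def aggregate(local_models):
    """Global model built by summing raw counts from all clients.

    Counter.update adds counts and, unlike the `+` operator, keeps zero or
    negative results, so nothing here stops invalid counts from propagating.
    """
    global_model = Counter()
    for model in local_models:
        global_model.update(model)
    return global_model

client_a = local_model([("explorer.exe", "chrome.exe")] * 3 + [("winword.exe", "cmd.exe")])
client_b = local_model([("explorer.exe", "chrome.exe")] * 2)
global_model = aggregate([client_a, client_b])
print(global_model)
```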

Model poisoning in online distributed training

In our experiments, we consider the adversary to be in control of a single client that is able to submit one local model for aggregation. The adversary has found a vulnerability and can compromise a common process on a victim’s machine. The adversary intends to perform malicious actions by using the compromised process to launch a specific child process. The compromised parent process is common, meaning that it often launches other child processes, and the process the attacker wants to launch is a common child process, meaning that it is often launched by other processes. However, the compromised process usually does not launch the target child process. The parent-child pair therefore falls into a high anomaly category, and launching it on a machine protected by the anomaly detection model would be flagged as suspicious.

Our experiments assume that the adversary has gained an understanding of how the anomaly detection system works (by studying the contents of the local model on the compromised system). In order for the adversary’s actions to go undetected, they must poison the global model such that the desired process creation pair is no longer categorized as an anomaly by any machine on the network. The adversary must thus craft an attack that will poison the global model during the next model update cycle by making modifications to their local model. Changes should be minimal in order to maintain stealth (if a poisoning attack requires significant modifications to the local model, it may be identified as anomalous by the aggregator and thus blocked). The adversary performs this attack by adding or deleting rows or changing values in the local model’s table.

Experimental setup

We used a real-world setup with 247 clients collaborating to train a global model in an online distributed manner. Each client recorded 24 hours’ worth of process creation events in their local model, which was then sent to an aggregator and combined into a global model. The trained global model was then sent back to each of the 247 clients participating in the training.

After one cycle, the global model had received a total of 22,478,835 process launch events from all clients, divided into 41,975 distinct process creation pairs. On average, each client reported 91,007 events, but numbers varied significantly – one client reported 5,610,402 launches, roughly one quarter of the total volume. Note that it is normal for the volume of process launch events reported by clients to vary significantly – each machine on a network is operated by a different individual. High numbers of process launch events can occur, for instance, in software development environments where compilation jobs launch thousands of short-lived processes. Discrepancies between reported event volumes from different clients do not affect the efficacy of the model.

Attack 1: Increase target process creation count

In order to decrease the anomaly category of a parent-child pair, we can increase its score in the model. Given a parent-child pair’s count and its corresponding anomaly score in the global model, we can compute a target count value that, when injected into our local model, will poison the global model and move the pair’s score into a new anomaly category. Details on how these values were calculated are found in the full report. Since the attacker does not know the counts that other local models will contribute to the global model during the next update step, we experimented with heuristically increasing the calculated value by 20%.
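The post leaves the exact calculation to the full report. As an illustration only, the sketch below derives a target count under its own assumptions: the score is c_pair · total / (c_parent · c_child) (PMI without the logarithm, estimated from counts), the category threshold to cross stays fixed, and the rest of the global model is unchanged. Injecting x launches of the pair raises the pair, parent, child and total counts by x each, which turns the condition into a quadratic inequality in x; the 20% margin mirrors the heuristic above. Function names and counts are hypothetical.

```python
import math

def score_after_injection(c_pair, c_parent, c_child, total, x):
    """Assumed score c_pair * total / (c_parent * c_child) after adding x
    launches of the target pair, which also adds x to the parent count,
    the child count and the total."""
    return (c_pair + x) * (total + x) / ((c_parent + x) * (c_child + x))

def required_injection(c_pair, c_parent, c_child, total, threshold, margin=1.2):
    """Return (minimal x, x with safety margin) so the score reaches `threshold`.

    score(x) >= t  <=>  (1 - t) x^2 + (c_pair + total - t (c_parent + c_child)) x
                        + (c_pair * total - t * c_parent * c_child) >= 0
    For t < 1 the quadratic opens upwards, so when it is negative at x = 0
    its larger root is the smallest x that works.
    """
    if not 0 < threshold < 1:
        raise ValueError("this sketch only handles thresholds in (0, 1)")
    a = 1 - threshold
    b = c_pair + total - threshold * (c_parent + c_child)
    c = c_pair * total - threshold * c_parent * c_child
    if c >= 0:
        return 0, 0  # score already at or above the threshold
    x = math.ceil((-b + math.sqrt(b * b - 4 * a * c)) / (2 * a))
    # Nudge x to absorb floating-point error at the boundary.
    while score_after_injection(c_pair, c_parent, c_child, total, x) < threshold:
        x += 1
    while x > 0 and score_after_injection(c_pair, c_parent, c_child, total, x - 1) >= threshold:
        x -= 1
    return x, math.ceil(x * margin)

# Made-up global counts for one anomalous pair and a threshold of 0.5.
print(required_injection(c_pair=1, c_parent=10_000, c_child=20_000,
                         total=1_000_000, threshold=0.5))
```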

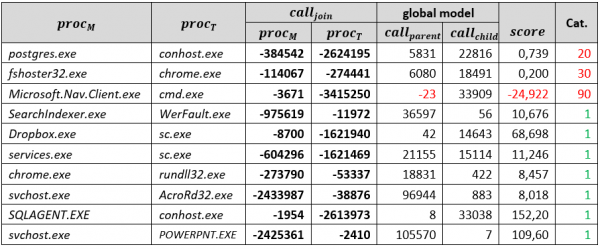

To evaluate this attack, we randomly selected a few parent-child pairs representing anomalous process creation events in the global model that were rarely observed, had low scores, and were consequently assigned high-index anomaly categories (below).

10 randomly selected anomalous process launches with their statistics in the global model

The following table shows an example of results of this attack on the selected parent-child pairs, including the computed values, their 20% increased versions, resulting scores, and the anomaly categories that were obtained when targeting anomaly category 1.

‘increase process creation count’ poisoning attack results

Overall, this attack method succeeded in about half of the cases when the exact computed values were inserted into the poisoned local model, and it always succeeded when the values were increased by 20%. The values we needed to inject varied significantly. Predictably, reaching a lower anomaly category (e.g., 1 vs. 10) required us to insert higher values. When either the parent process or the child process was not prevalent (counts in the range of tens of thousands), the adversary needed to inject only a few hundred or a few thousand process creation events in order to succeed. Such an operation can be considered stealthy, since we observed that a single client, on average, records around 90,000 process launches in its local model.

Attack 2: Decrease parent or child process creation count

Our second attack was based on decreasing values of either parent or child counts in a local model in order to increase the score for that pair. This attack is challenging since the adversary cannot prevent benign clients from reporting their own process launch counts. In order to perform this attack, we inserted negative values into a compromised local model. We injected both child and parent counts into the local model’s table, in order to increase the effectiveness of the attack and better simulate what real-life adversaries would likely do. Unlike the previous attack, we did not apply any adjustments to our calculated values to account for the lack of our knowledge about benign client contributions, since results showed that such adjustments had no effect.

The following table shows an example of the results of these experiments.

‘decrease parent or child process creation count’ poisoning attack results

This attack method succeeded in 70% of cases when targeting anomaly category 1 and in 80% of cases where anomaly category 20 was targeted. Unfortunately, in order for this attack to succeed, both child and parent counts had to be dramatically decreased, sometimes by several orders of magnitude. Calculating the correct values to perform this attack was problematic, leading to the attack either failing (not increasing the score sufficiently) or overperforming (increasing it too much). In some cases, the score was over-adjusted to a negative value, leading it to be assigned the highest anomaly category (90). Since the attack required us to inject negative numbers into a local model, it would be trivial to detect and discard such a poisoned model during the aggregation process because negative counts are invalid inputs that no legitimate client should submit.
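Why these values are so hard to calibrate can be seen in a small sketch, again assuming the score is c_pair · total / (c_parent · c_child). Negative counts on rows that share the target's parent or child shrink the denominator: the score first grows slowly, then explodes as a count approaches zero, and becomes negative once a count drops below zero. The row layout and the counts are hypothetical.

```python
def score(c_pair, c_parent, c_child, total):
    """Assumed score: c_pair * total / (c_parent * c_child)."""
    return c_pair * total / (c_parent * c_child)

def score_after_decrease(c_pair, c_parent, c_child, total, d):
    """Score once a poisoned local model reports -d launches on a row
    (parent, other_child) and -d on a row (other_parent, child).

    The target pair's own count is untouched; the parent and child counts
    each drop by d and the total drops by 2 * d.
    """
    return score(c_pair, c_parent - d, c_child - d, total - 2 * d)

# Made-up global counts for a rare pair of two common processes.
c_pair, c_parent, c_child, total = 1, 40_000, 30_000, 20_000_000
for d in (0, 20_000, 29_000, 29_990, 35_000):
    print(d, score_after_decrease(c_pair, c_parent, c_child, total, d))
```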

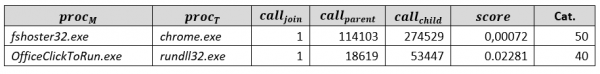

Attack 3: Inject rare process creation events unrelated to the attack

The aim of our third attack was to decrease all anomaly threshold values in the target model, such that the desired parent-child pair ends up above those values (and hence is assigned a lower anomaly category). In this experiment our aim was to determine the lowest anomaly category obtainable using the attack method (as opposed to targeting a specific anomaly score). We selected two process pairs belonging to categories 50 and 40. The pairs are shown below. Note how, in both cases, the parent-child pair has a count of 1 (extremely rare), but both processes have quite high parent and child counts.

Process pairs selected for experimenting with ‘Inject rare process creation events unrelated to the attack’ approach and their parameters in the global model

To perform this attack, we gathered the 100 processes with the highest child counts and the 100 processes with the highest parent counts from the global table. We built a list of the 10,000 possible pairwise combinations of these two lists, obtained PLD scores for each pair from the pre-existing trained model, and discarded all pairs with PLD scores higher than the score of our selected attack pair. For each remaining process pair, we then computed the maximum count that could be injected while keeping its score below that of the target pair, in order to poison the score distribution of the next global model with as many low-score pairs as possible. Since two consecutive global models differ somewhat, we reduced the injected value for each process creation pair by 10% to help ensure that the PLD scores of the injected pairs remained lower than the PLD score of the target process launch pair in the next global model.
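The candidate selection can be sketched as follows, under stated assumptions: the score is c_pair · total / (c_parent · c_child); only candidate pairs currently scoring below the target pair are kept, since those are the ones whose scores can be held under the target's while events are added; and each pair's maximum count is computed independently from the current global counts, ignoring that injecting many pairs at once shifts the parent, child and total counts (which the 10% reduction only partly absorbs). All names are hypothetical.

```python
import math
from collections import Counter
from itertools import product

def pld_score(c_pair, c_parent, c_child, total):
    """Assumed score: c_pair * total / (c_parent * c_child)."""
    return c_pair * total / (c_parent * c_child)

def max_count_below(c_pair, c_parent, c_child, total, t):
    """Largest x such that adding x launches keeps the pair's score below t.

    Solves, for 0 < t < 1:
    (1 - t) x^2 + (c_pair + total - t (c_parent + c_child)) x
    + (c_pair * total - t * c_parent * c_child) < 0.
    """
    a = 1 - t
    b = c_pair + total - t * (c_parent + c_child)
    c = c_pair * total - t * c_parent * c_child
    if c >= 0:
        return 0  # already at or above t
    root = (-b + math.sqrt(b * b - 4 * a * c)) / (2 * a)
    return max(math.ceil(root) - 1, 0)

def plan_injection(table, target, top_n=100, reduction=0.10):
    """Map candidate (parent, child) pairs to the number of launches to inject."""
    parents, children = Counter(), Counter()
    for (p, ch), n in table.items():
        parents[p] += n
        children[ch] += n
    total = sum(table.values())
    t = pld_score(table[target], parents[target[0]], children[target[1]], total)
    if not 0 < t < 1:
        raise ValueError("sketch assumes the target's score lies in (0, 1)")
    top_parents = [p for p, _ in parents.most_common(top_n)]
    top_children = [ch for ch, _ in children.most_common(top_n)]
    plan = {}
    for p, ch in product(top_parents, top_children):
        if (p, ch) == target:
            continue
        c_pair = table[(p, ch)]  # a Counter returns 0 for unseen pairs
        if pld_score(c_pair, parents[p], children[ch], total) >= t:
            continue  # discard pairs already scoring at or above the target
        x = max_count_below(c_pair, parents[p], children[ch], total, t)
        x = math.floor(x * (1 - reduction))  # safety reduction (10% in the text)
        if x > 0:
            plan[(p, ch)] = x
    return plan

table = Counter({("A", "X"): 500, ("A", "Y"): 300, ("B", "X"): 300,
                 ("B", "Y"): 1, ("C", "Z"): 400})  # made-up global model
print(plan_injection(table, ("B", "Y")))
```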

For the first pair (fshoster32.exe, chrome.exe), we found 616 candidate process creation pairs available for injection. A total of 9,047 process creation events were inserted into the local model, with an average value of 15. This attack succeeded in reducing the anomaly category from 50 to 30 in the global model.

For the second pair (OfficeClickToRun.exe, rundll32.exe) we found 3,258 candidate process creation pairs for injection. A total of 345,466 process creation events were injected, the average value being around 106. This attack succeeded in reducing the anomaly category from 40 to 10 in the global model.

Achieving a drop from 50 to 30 for our first target pair was done by injecting just a few hundred process creation records, with very low counts. Since clients report around 90,000 process creation events on average during one round of training, injecting 9,047 process creation events is just a drop in the bucket, and can thus be considered stealthy. On the other hand, in our second attack, moving an anomaly category from 40 to 10 required the injection of over 300,000 process launch events – a number significantly larger than the average 90,000 events reported per client. In our initial setup, we witnessed one client reporting over 5,000,000 process launches in their local model during a 24-hour period. Using this client to perform the poisoning attack would allow us to maintain stealth.

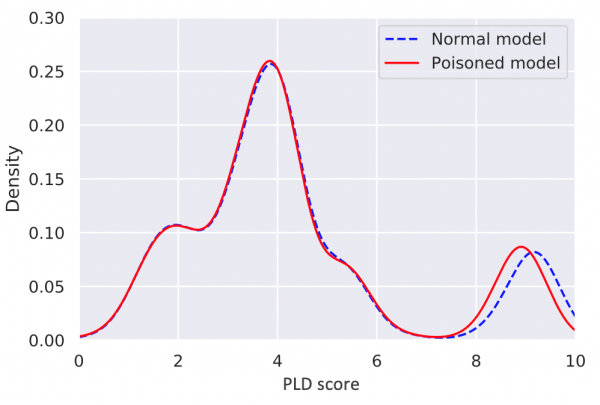

Anomaly category thresholds in our model are based on quantiles of exponentially decreasing size. In order to move the threshold for category 1 to a very low value, we would need to inject roughly 10% of the total number of process creation events into the model. Injecting around 1% would be sufficient to move the threshold for category 10, and so on. In our experimental setup, the global model contained over 22,000,000 process creation events. Hence, we would need to inject around 2,200,000 new events with low anomaly scores to move a target process pair to category 1 and around 220,000 to reach category 10. The figure below shows the score distributions of the entire model before and after one of our poisoning attacks. Note that the two distributions look almost identical.

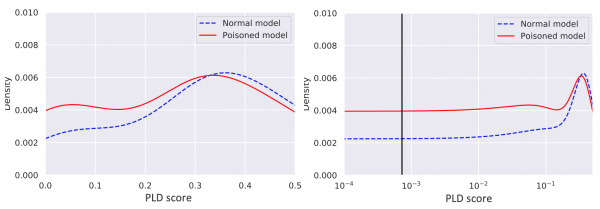

Score distribution for initial and poisoned models in the experiment with (fshoster32.exe, chrome.exe). Target category is 30. 9,047 process creation events injected in the poisoned model.
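The injection budgets quoted above (roughly 10% and 1% of the global model's events) can be refined with a back-of-the-envelope calculation that is not taken from the full report: if x injected events must themselves fill a fraction q of the poisoned model, then x ≥ q(N + x), i.e. x ≥ qN / (1 - q). This assumes every injected event scores below the original events around the target pair; the function name is hypothetical.

```python
import math
from fractions import Fraction

def events_to_fill_quantile(total_events, q):
    """Minimum number x of injected low-score events that alone make up a
    fraction q of the poisoned model: x >= q * (N + x), i.e. x >= q * N / (1 - q).

    Assumes every injected event scores below all original events near the
    target pair; `q` should be a Fraction to keep the arithmetic exact.
    """
    return math.ceil(q * total_events / (1 - q))

N = 22_478_835  # process launch events in the global model (from the text)
print(events_to_fill_quantile(N, Fraction(1, 10)))   # category 1 threshold
print(events_to_fill_quantile(N, Fraction(1, 100)))  # category 10 threshold
```

This gives 2,497,649 events for category 1 and 227,059 for category 10, slightly above the rounded figures because the injected events also enlarge the total.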

If we zoom in on the region where poisoning occurs (below), we observe noticeable differences between the initial and poisoned models. Unfortunately, changes such as these can easily be caused by concept drift (naturally changing values between model updates), thus making these poisoning attacks hard to detect.

Score distribution for initial and poisoned models in the interval close to 0 in the experiment with (fshoster32.exe, chrome.exe).

Score distribution for initial and poisoned models in the interval close to 0 in the experiment with (OfficeClickToRun.exe, rundll32.exe).

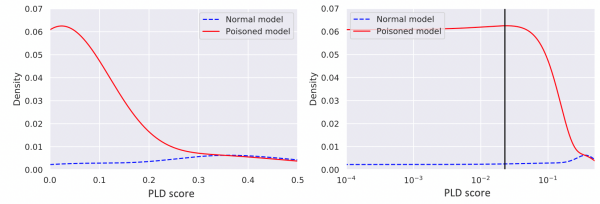

The effects of our poisoning attacks can be better observed in the figures below, where we visualize threshold values. Note that the first poisoning attack led to an increased density in the score distribution at very small positive values (0.002 -> 0.004). By shifting all of the thresholds upwards and into a very narrow band, the attack placed the target pair in anomaly category 30 (instead of 50 prior to the attack). Since a large number of other events fall into similar categories, a security analyst may not notice this particular attack. Additionally, events that previously fell into categories of 40 or higher now receive much higher anomaly categories (50 or more) due to the changed threshold distribution.

Anomaly category thresholds (orange lines) in initial (left) and poisoned (right) models in the experiment with (fshoster32.exe, chrome.exe). Black line = target pair. Left: initial model has 2 thresholds (categories 60 and 50) below target pair. Right: poisoned model has 4 thresholds (categories 60, 50, 40 and 30) below target pair.

A greater change was observed in the second attack (below), where density increased by a factor of 30. While the previously illustrated change may be explained by concept drift, this one should raise eyebrows (and perhaps an automated alarm). Monitoring how a model’s thresholds change during retraining would catch such an attack.

Anomaly category thresholds (orange lines) in initial (left) and poisoned (right) models in the experiment with (OfficeClickToRun.exe, rundll32.exe). Black line = target pair. Left: initial model has 3 thresholds (categories 60, 50 and 40) below target pair. Right: poisoned model has 6 thresholds (categories 60 to 10) below target pair.
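A minimal sketch of such threshold monitoring, assuming the per-category thresholds of two consecutive global models are available as positive numbers in the same order. The function name and the alert ratio of 3 are hypothetical and would need tuning against normal concept drift.

```python
def threshold_drift_alerts(old_thresholds, new_thresholds, max_ratio=3.0):
    """Compare per-category thresholds of two consecutive global models.

    Returns (index, old, new, ratio) for every threshold that moved by more
    than `max_ratio` times in either direction. Thresholds must be positive
    and listed in the same category order in both models.
    """
    alerts = []
    for i, (old, new) in enumerate(zip(old_thresholds, new_thresholds)):
        ratio = max(old / new, new / old)
        if ratio > max_ratio:
            alerts.append((i, old, new, ratio))
    return alerts

print(threshold_drift_alerts([0.5, 0.05, 0.005], [0.5, 0.04, 0.0005]))
```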

Defense recommendations

Here are some recommendations to counter model poisoning of this nature. Note that we will focus on examining the effectiveness of these suggested defenses in a future SHERPA report.

Check input format and validity: Our second simulated attack inserted negative values into a local model, which was subsequently aggregated into the global model. This approach can easily be defeated by rejecting, prior to aggregation, any local records or models that contain invalid values. When designing an online distributed training process, one should define formats and validity conditions for local models and data, listing all their parameters, properties, fields, etc. and defining acceptable ranges for them.
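A validity check along these lines might look like the sketch below, assuming local models are mappings from (parent, child) name pairs to positive integer counts. The function name and the upper bound are hypothetical, and a real format specification would cover more fields.

```python
def validate_local_model(model, max_count=10_000_000):
    """Return a list of problems; an empty list means the model is accepted.

    Assumed format: (parent, child) pairs of non-empty strings mapped to
    launch counts. `max_count` is a hypothetical upper bound per row.
    """
    problems = []
    for key, count in model.items():
        if not (isinstance(key, tuple) and len(key) == 2
                and all(isinstance(name, str) and name for name in key)):
            problems.append(f"malformed key: {key!r}")
            continue
        if isinstance(count, bool) or not isinstance(count, int):
            problems.append(f"non-integer count for {key}: {count!r}")
        elif not 0 < count <= max_count:
            problems.append(f"count out of range for {key}: {count}")
    return problems

print(validate_local_model({("winword.exe", "cmd.exe"): -5000}))
```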

Normalize local models and data before aggregation: In our experimental setup, local models were aggregated into a global model by summing together raw parent-child pair values. This approach allows compromised or dishonest clients to have greater impact on the global model, since they can submit much higher values than other clients in order to influence the global model in their interest. One way to address this issue is to normalize local models or data points prior to aggregation, for instance by requiring clients to submit frequencies instead of counters. As such, the sum of the submitted values in each local model would be equal to 1, and all the clients would contribute to the global model equally.
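A sketch of normalized aggregation, assuming local models are count tables keyed by (parent, child) pairs: each client's counts are turned into frequencies summing to 1, and the global model averages them so that every client carries the same weight. Function names are hypothetical.

```python
from collections import Counter

def normalize(local_model):
    """Turn raw counts into frequencies that sum to 1."""
    total = sum(local_model.values())
    return {pair: n / total for pair, n in local_model.items()}

def aggregate_normalized(local_models):
    """Average the clients' frequency tables so each client weighs equally."""
    global_model = Counter()
    for model in local_models:
        for pair, freq in normalize(model).items():
            global_model[pair] += freq / len(local_models)
    return global_model

busy = {("p.exe", "c.exe"): 1_000_000}            # a very active client
quiet = {("p.exe", "c.exe"): 1, ("p.exe", "d.exe"): 1}
print(aggregate_normalized([busy, quiet]))
```

The trade-off is that a client reporting a handful of launches weighs as much as one reporting millions, so normalization limits the leverage of a single poisoned client rather than removing it.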

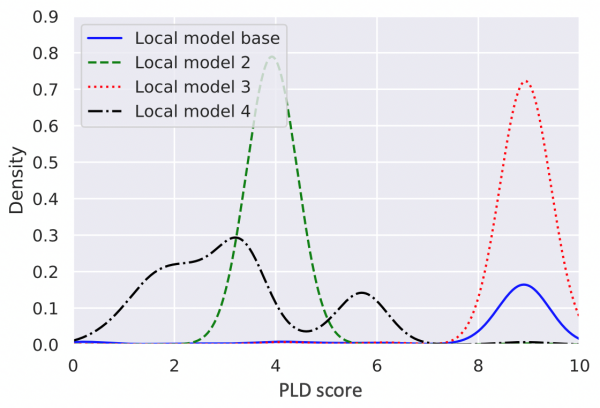

Perform outlier detection for poisoned local models and data: A common approach for detecting poisoning attacks is based on identifying local models or data points that deviate significantly from the majority of all local models or data points submitted for aggregation. A clustering algorithm can, for instance, be applied to all submitted data in order to identify outliers. This defence approach is only effective when the distributions of local models are somewhat similar, which was not the case in our experimental setup. Below is an example of four different local model distributions from our experimental setup. They are all completely different.

Score distribution for four different local models in our experiment.
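As a simpler stand-in for clustering, the sketch below flags local models whose typical total variation distance to the other models is unusually large, using a median-based modified z-score with the usual 3.5 cutoff. This is a swapped-in technique for illustration, not the one evaluated in the study; with local distributions as heterogeneous as those shown above, a rule like this would likely flag many benign clients. Function names and data are hypothetical.

```python
from statistics import median

def to_freqs(model):
    """Normalize a count table to frequencies summing to 1."""
    total = sum(model.values())
    return {k: v / total for k, v in model.items()}

def total_variation(p, q):
    """Total variation distance: 0 for identical tables, 1 for disjoint ones."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

def flag_outliers(local_models, z_cut=3.5):
    """Indices of local models that sit unusually far from the others.

    Each model gets the median of its distances to all other models; a robust
    z-score based on the median absolute deviation (MAD) above `z_cut` flags it.
    """
    freqs = [to_freqs(m) for m in local_models]
    typical = [median(total_variation(f, g) for j, g in enumerate(freqs) if j != i)
               for i, f in enumerate(freqs)]
    center = median(typical)
    mad = median(abs(d - center) for d in typical)
    if mad == 0:
        return [i for i, d in enumerate(typical) if d > center]
    return [i for i, d in enumerate(typical) if 0.6745 * (d - center) / mad > z_cut]

clients = [{("a", "b"): 10, ("a", "c"): 10}, {("a", "b"): 11, ("a", "c"): 9},
           {("a", "b"): 9, ("a", "c"): 11}, {("a", "b"): 10, ("a", "c"): 10},
           {("a", "b"): 12, ("a", "c"): 8}, {("x", "y"): 5}]
print(flag_outliers(clients))
```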

Reject local models with a large distance from the global model: Another approach to detecting and removing poisoned local models is based on computing their distances to the global model. If the distance between a local model and the global model is too high, the local model is rejected. This technique only works if local model distributions are similar to the global model – again, not the case in our experiments.
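One way to measure that distance is the Jensen-Shannon divergence between the local and global frequency tables, which lies between 0 and 1 when computed in bits. In the sketch below, the choice of divergence, the function names, and the cutoff of 0.3 are assumptions for illustration.

```python
import math

def frequencies(model):
    """Normalize a count table to frequencies summing to 1."""
    total = sum(model.values())
    return {k: v / total for k, v in model.items()}

def js_divergence(p, q):
    """Jensen-Shannon divergence in bits: 0 if identical, 1 if disjoint."""
    keys = set(p) | set(q)
    m = {k: 0.5 * (p.get(k, 0.0) + q.get(k, 0.0)) for k in keys}

    def kl_to_m(a):
        # Kullback-Leibler divergence from `a` to the mixture `m`.
        return sum(a[k] * math.log2(a[k] / m[k]) for k in a if a[k] > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)

def accept(local_model, global_model, max_divergence=0.3):
    """Reject a local model whose distribution is too far from the global one."""
    return js_divergence(frequencies(local_model), frequencies(global_model)) <= max_divergence
```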

Detect abnormal evolution of local models or data points over time: By monitoring the evolution of individual local models or data over time, it may be possible to detect a poisoned local model if it differs significantly from local models submitted by that client in the past. This method assumes that local models contain roughly similar distributions at successive update steps.
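One concrete way to monitor evolution, in line with the increased low-score densities reported for our poisoning experiments, is to track the fraction of each client's events that score below a cutoff under the current global model, and to raise an alert when that fraction jumps compared with the client's earlier submissions. The scoring function, the cutoff, and the threefold jump are hypothetical parameters.

```python
def low_score_fraction(local_model, score_fn, cutoff):
    """Fraction of a client's launch events whose score falls below `cutoff`.

    `score_fn(parent, child)` returns the pair's score under the current
    global model; `cutoff` could be, e.g., one of the anomaly thresholds.
    """
    total = sum(local_model.values())
    low = sum(n for (parent, child), n in local_model.items()
              if score_fn(parent, child) < cutoff)
    return low / total

def evolution_alert(history, current, score_fn, cutoff,
                    max_increase=3.0, min_fraction=1e-4):
    """Alert when the low-score fraction jumps relative to the client's past.

    `history` holds the client's earlier, accepted local models.
    `min_fraction` avoids comparing against zero for clients that never
    reported low-score events.
    """
    past = max(low_score_fraction(m, score_fn, cutoff) for m in history)
    now = low_score_fraction(current, score_fn, cutoff)
    return now > max_increase * max(past, min_fraction)
```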

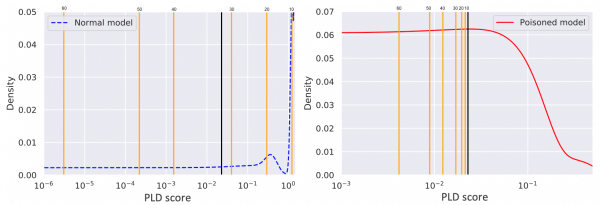

To validate this approach, we randomly selected a client. In its ‘last good’ (or normal) local model, the client reported 556 process creation pairs and 93,423 process creation events. We injected the process creation events required for carrying out our first and third poisoning attacks into that client’s local model.

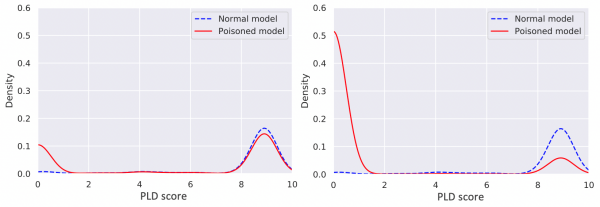

Score distributions for normal and poisoned local models in the ‘increase target process creation count’ attack for (postgres.exe, conhost.exe). Left: target anomaly category = 20 (13,683 process creation events injected). Right: target anomaly category = 1 (145,920 process creation events injected).

Comparing the score distributions of the normal and poisoned local models in the upper figure, we clearly see differences in low score areas. This indicates that poisoned local models can often be detected by comparing them with earlier local models from the same clients. However, the lower figure illustrates that poisoning attacks that require the injection of only small numbers of process creation events (hundreds in the considered case) are still difficult to detect with model evolution monitoring strategies.

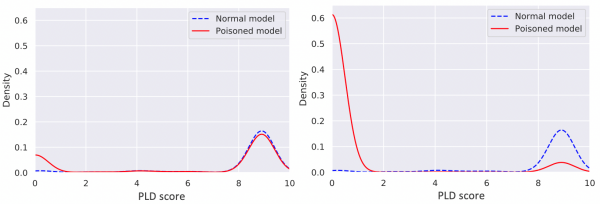

Similar observations were made in the ‘inject rare process creation events unrelated to the attack’ case, presented below. These poisoning attacks also resulted in significantly increased distribution densities in low score areas.

Score distributions for normal and poisoned local models in the ‘Inject rare process creation events’ attack. Left: experiment with (OfficeClickToRun.exe, rundll32.exe), 345,466 events injected. Right: experiment with (fshoster32.exe, chrome.exe), 9,047 events injected.

As shown in our experiments, leveraging the retraining aspect of online distributed learning is a promising avenue for detecting poisoned local models (or data). Assuming that a client was a benign participant of the training in the past (e.g., prior to getting compromised by an adversary), we can likely detect its poisoned local model by comparing it with earlier local models. Note that if a client had been compromised very early on, or at the moment it started participating in distributed learning, it would be more difficult to detect the fact that it was poisoned.

Use strong client authentication (countering Sybil attacks): In this study, we simulated a single compromised client operating in a single model update round. However, a skilled adversary would likely distribute their attack across multiple compromised clients, or even fake clients. Strong client authentication can be used to prevent an adversary from creating fake clients and may increase the attack cost for the adversary (in particular, if obtaining authentication credentials requires payments, licensing, or access to a secret key for signing). Unfortunately, there exist scenarios where client authentication is undesirable or impossible, for instance, due to anonymity requirements. While some defences have been developed to detect Sybil attacks in federated learning, they are based on strong assumptions about the similarity of local models or data contributed by every Sybil client, and thus can be easily circumvented by a creative adversary.
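For the signing variant of client authentication, a minimal sketch with per-client shared secret keys and HMAC-SHA256 from Python's standard library is shown below. The payload layout, key store, and function names are assumptions; asymmetric signatures would avoid the aggregator holding every client's secret, and no authentication scheme stops a legitimate client that has itself been compromised.

```python
import hashlib
import hmac
import json

def sign_local_model(model, client_id, key):
    """Client side: serialize the local model deterministically and sign it."""
    rows = sorted([parent, child, n] for (parent, child), n in model.items())
    payload = json.dumps({"client": client_id, "rows": rows},
                         separators=(",", ":")).encode()
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return payload, tag

def verify_submission(payload, tag, client_keys):
    """Aggregator side: accept only payloads signed with the claimed client's key."""
    try:
        key = client_keys.get(json.loads(payload)["client"])
    except (ValueError, KeyError, TypeError):
        return False  # malformed submission
    if key is None:
        return False  # unknown identity, e.g. a fake (Sybil) client
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)

client_keys = {"client-17": b"secret-issued-at-enrolment"}  # hypothetical key store
payload, tag = sign_local_model({("explorer.exe", "chrome.exe"): 3}, "client-17",
                                client_keys["client-17"])
print(verify_submission(payload, tag, client_keys))
```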

In the context of the SHERPA project, we are developing mechanisms to detect poisoning attacks against distributed machine learning systems, including the attacks detailed in this article. The results of that research will be published in a separate report.

Conclusion

Our experiments illustrate how an adversary can successfully perform a variety of poisoning attacks against an online distributed learning system from a single compromised client, over a single update step, without knowledge or control over the training contributions of other benign clients. Our simulated attacks were hard to detect due to their relatively modest scale, and were difficult to distinguish from regular background noise and concept drift effects.

Methods for detecting and mitigating these types of poisoning attacks include:

- input validation

- normalisation of client contributions (local models or data points)

- monitoring of contributions of each client over time to detect anomalies

- strong client authentication

Some proposed defence approaches rely on the fact that local and/or global models have similar distributions, and are thus not applicable to all use cases (such as ours).

The studied poisoning approaches are simple to carry out, effective, and applicable to models used in multiple domains, including recommenders on social media sites, online stores, and music and video streaming services, as well as credit card fraud prevention systems, network intrusion detection solutions, spam filtering software, medical diagnostics systems, and fault detection mechanisms. Real-world impacts of model poisoning vary depending on what the system is used for, but bear in mind that these types of attacks will always degrade a model’s accuracy and prevent it from working as intended. Our results should therefore be considered a strong warning for organizations developing and operating such services. For more detail on this subject, read this blog post (https://blog.f-secure.com/how-ai-is-already-being-poisoned-against-you/) that explores the impact of poisoning attacks against real-world services.

Our continued research in this area will focus on detecting and defending against the attacks detailed in this report.

This blog post summarizes our full SHERPA report, which covers this subject in a lot more detail. The report can be found here.

[1] One rare example is the Tay AI chatbot case (https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist), and it dates back to March 2016.